Unveiling MLOps: The Backbone of AI and Machine Learning Operations

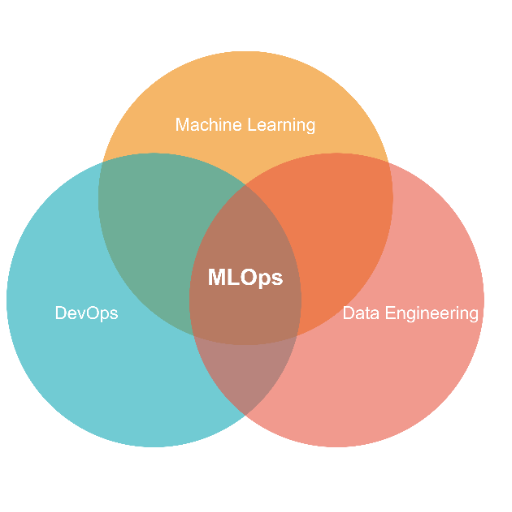

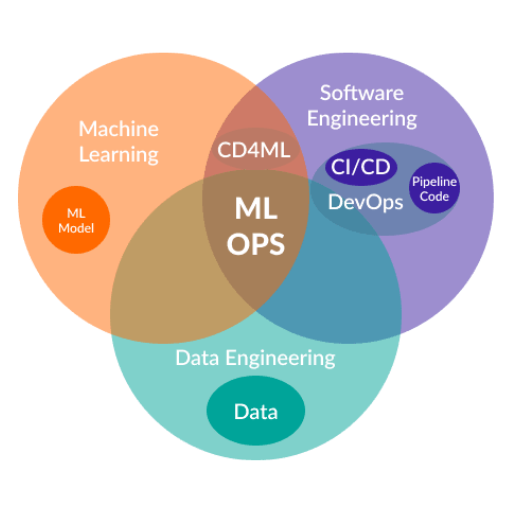

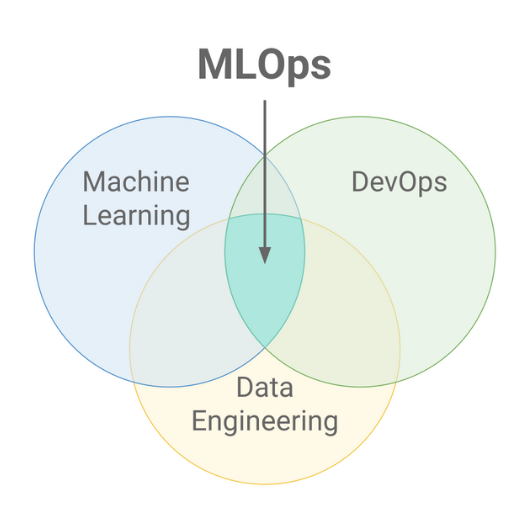

The growth and development of artificial intelligence (AI) and machine learning (ML) are creating greater complexity within these domains, and there is an increasing demand for appropriate solutions to manage their scalability. MLOps is an essential component of AI architecture that integrates the three pillars of data science, engineering, and business operations where data science and engineering’s boundaries fade. This article explains what MLOps is its components, and its relevance in current AI workflows. It also describes how MLOps can solve the issues of scalability, reproducibility, and collaboration. In the end, the reader is expected to appreciate the concepts of MLOps and its importance in successfully implementing AI and ML projects.

What is MLOps and Why Do We Need It?

MLOps, or Machine Learning Operations, refers to practices that unify machine learning development and operations. MLOps seeks to reduce the complexity of deployment, monitoring, and managing machine learning models after they have been created. MLOps addresses issues including embedding machine learning models into operational frameworks, maintaining quality assurance, and scalable operations. The importance of MLOps comes from its ability to dissolve both operational and data science silos by ensuring that trained models can be deployed efficiently, results reproduced, and collaboration encouraged across various teams. MLOps also minimizes resource expenditure and risk during AI advancement efforts’ enabling organizations to shift AI projects into production and use on real data and business processes.

Understanding the MLOps Definition

MLOps focuses on easing the development, deployment, and maintenance process for machine learning models. It stands for Machine Learning Operations and aims to ensure these models can be integrated into the needed production systems efficiently and scalably. It tries to resolve issues such as monitoring a model, versioning it, and retraining it to ensure optimal performance over time. It uses DevOps and data science concepts to enable organizations to apply AI practically while enhancing collaboration between the data workforces and IT professionals. MLOps assists businesses in fostering innovation while simplifying the management of machine learning workflows in real-life situations.

The Role of MLOps in AI

MLOps greatly improves AI systems' deployment, scaling, and operational efficiency. It offers a systemic solution to the disconnect between a developed model and a production system by providing integration, monitoring, and support services. Some critical parameters that fall under the MLOps umbrella are:

- Model Versioning: Recording experiments and versions through management systems like DVC or MLflow allows changes to be made, rolled back, or recreated.

- Automated Pipelines: Setting up Continuous Integration/Continuous Deployment (CI/CD) infrastructure for training, evaluation, and deployment of models increases effectiveness and uniformity.

- Model Monitoring: Implementing analytics for accuracy, precision, recall, and drift, among other measurements of deployed models, makes it possible to discover and address problems or decline in quality.

- Infrastructure Automation: Using containerization (like Docker) and orchestration (like Kubernetes) for reproducible and scalable infrastructure automation.

- Data Management: Automating data handling by validating and preprocessing for reliable input integration.

- Collaboration Tools: Platforms designed to improve interactions among team users, which may also include versioning and issue-tracking platforms.

The highlight of these parameters is the integration of agility, scaling, and reliability in real-life applications, which makes MLOps perfect for overcoming the challenges singled out in operationalizing AI systems.

How MLOps Provides Benefits to Organizations

MLOps offers excellent benefits to businesses because it helps deploy and maintain machine learning models. Here are some advantages:

- Increased Scalability: Using containerization applications like Docker and Kubernetes enhances the ability of the MLOps models to scale due to changing requirements without affecting the speed or quality of performance.

- Improved Cooperation: MLOps allows smooth cooperation between data scientists, DevOps, and engineers due to version control tools (Git) and continuous integration and delivery (CI/CD) systems.

- Quick Market Delivery: Automated pipelines for model training, validation, and deployment ease the process of iteration, optimizing businesses' efficiency in deploying AI solutions.

- Model Surveillance and Support: Continuous monitoring tools (Prometheus, Grafana) guarantee that the models provided remain precise and current by checking for data drift or deterioration in effectiveness.

- Reproducibility and Auditability: MLOps create a structure guaranteeing that models and experiments encounter reproducibility and auditability, which is needed in compliance and accountability in areas like healthcare and finance.

- Resource Allocation and Duplication: Automating scaling and resource allocation with a cloud environment helps lower operational costs, especially when high availability is needed.

With these practices integrated, MLOps allows businesses to transform faster while creating agile, dependable, and robust AI systems that ensure sustained value.

How Does MLOps Relate to DevOps?

MLOps and DevOps have differences, such as automation, collaboration, and constant delivery, but they serve different purposes. MLOps further expands software development and deployment processes with machine learning applications. MLOps controls data workflows, model training, monitoring, and classic software pipelines. Both aim towards the alleviation of work processes, the increase of productivity, and reliability. However, for MLOps, the challenge is integrating AI/ML models into production environments.

Comparing MLOps and DevOps Methodologies

In my opinion, both MLOps and DevOps seek to improve productivity and reliability in production processes, though both differ in their areas of interest and level of sophistication. DevOps revolves around the integration and automation of software development. At the same time, MLOps, which works on top of this base, focuses on machine learning systems’ distinctive requirements, such as complex data workflows, model retraining, and post-deployment monitoring and supervision of models.

Some of the more important features that set MLOps apart from DevOps are as follows:

- Data Management:

- In MLOps, special attention is paid to dataset versioning, dataset preprocessing, and validation, all of which directly impact model accuracy.

- This starkly contrasts DevOps’ more central concern – repositories and artifacts code management.

- Model Lifecycle Management:

- MLOps has CT workflows in which models are continuously retrained as new data becomes available. This is accomplished through pipeline automation and model hyperparameter tuning.

- In contrast, DevOps is chiefly concerned with CI/CD of standard software builds.

- Monitoring and Feedback Loops:

- MLOps enables model monitoring (drift detection, accuracy metrics), which ensures that models operate effectively in the actual operational environment.

- DevOps’ monitoring concentration is on application working uptime, performance, and error detection.

By adding layers to traditional DevOps to cater to AI/ML systems needs, MLOps facilitates effective management and control, both infrastructural and intelligent systems, solving issues particular to machine learning pipelines.

Integrating MLOps into Existing DevOps Practices

Incorporating MLOps into standard DevOps workflows requires applying traditional DevOps practices to machine learning systems. This involves adding tools and processes that deal with model training, deployment, and tracking as a software release. In this regard, we implement MLOps with automated pipelines for data cleansing, model versioning, and model evaluation. Also, we focus more on collaboration between data scientists, engineers, and operations staff to make everything work well together. This approach improves productivity and guarantees that machine learning models perform as expected.

What are the Key Components of MLOps?

The essential pillars of MLOps are:

- Data Management: A focus on proper data collection, storage, and preprocessing methods for training and deployment consistency and reliability.

- Model Development: Building machine learning models and tracking changes to them across their training and validation phases with version control for reproducibility.

- Automated Pipelines: Creating CI/CD pipelines automating model training, testing, and deployment processes.

- Monitoring and Maintenance: Relentlessly measure models' performance in production and look for ways to update them to ensure they’re correct.

- Collaboration and Communication: Working with data scientists, engineers, and operations personnel for efficient communication and workflow.

The Role of Pipeline in MLOps

In my view, MLOps cannot function efficiently without pipelines because they facilitate the execution and scalability of the various tasks that comprise machine learning workflows. Starting from steps such as data preprocessing, models are trained, and the machine learning operations are deployed and monitored automatically. With the integration of CI/CD concepts, iterations and updates are achieved quickly, ensuring that models work optimally. They also enable different teams to work together, help to quickly sort out problems, and control processes, which assist in machine learning operations, resulting in efficient and scalable solutions.

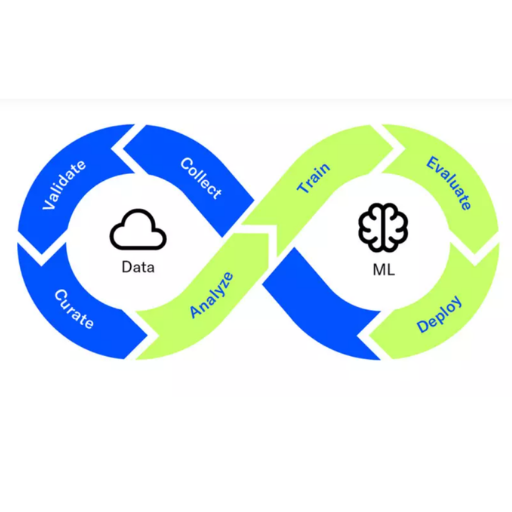

Managing the Machine Learning Lifecycle

A smooth execution of the machine learning life cycle requires working in stages that include precise data collection, model deployment, and monitoring. I always emphasized efficiency and consistency in the processes. To start with, I emphasize the collection and preprocessing of relevant data to ensure that there is enough data ready for the model training. In addition, I provide good organization and reproducibility by applying version control and experiment tracking tools. For model training, automated pipelines are essential to make every workflow as efficient as possible, and this is combined with other CI/CD concepts. Lastly, I ensure burdensome monitoring frameworks to deploy models to tackle data drift and performance deterioration. This approach enables me to be flexible, collaborate easily, and deliver reliable, versatile results.

Ensuring Model Performance and Validation

To ensure a model is performing correctly, I apply an extensive cross-validation process for accuracy checking and generalization. I monitor core business indicators such as accuracy, coverage, F1 score, and AUC-ROC to measure efficiency from different perspectives. I use operational test datasets and conduct backtesting to emulate production environment scenarios. Around-the-clock monitoring using automated alerts also assists in discovering problems owing to issues like data drift or performance erosion, which makes it impossible to meet objectives on time and refresh the model.

How to Implement MLOps Best Practices?

To successfully execute MLOps best practices, begin with transparent end-to-end workflow processes that include data collection and model deployment. There should also be automation of processes such as data cleansing, model training, and other evaluation processes using pipelines for easy reproducibility and scalability. Maintain code and data versioning for consistency and the ability to track changes. Take advantage of CI pipelines for ease of model updates and dissemination. Using accuracy, latency, and data drift metrics, issue engagement can be automated for faster resolution. Lastly, the data science, engineering, and domain expert teams should improve cooperation to achieve set goals.

Steps to Deploy and Maintain Machine Learning Models

- Preparation and Cleaning of Data

- Work to achieve a combination of high-quality and diverse training data. Employ data cleaning, normalization, and augmentation techniques to improve model performance.

- Maintain a split ratio of at least 80% for training and no more than 10% for validation and testing datasets, or a 70-15-15 split as an alternate.

- Development of Models and Their Version Control

- For model development, use Tensorflow, Pytorch or Scikit-learn frameworks.

- To help control versioning for experiments and models, use either Git or DVC tools to assist with model and data version changes.

- Training of Models

- Employ grid search, random search, or Bayesian optimization to tune hyperparameters.

- Where applicable, use GPUs or TPUs for heavy computational work.

- Testing and Validation of the Model

- Test models on the validation datasets for accuracy, precision, recall, F1, or MAPE, depending on the case.

- Test rigorously to check the level of generalization and reliability with unexperienced scenarios.

- Containerization and Packaging.

- To ensure consistency in different environments, use Docker to package models.

- Also, include containers with the runtime dependencies and configurations.

- Deployment Methodology

- Depending on the application, choose a deployment method, including batch inferencing, real-time inferencing, and edge deployments.

- API frameworks such as FastAPI and Flask are used to serve model predictions.

- Deploy your model at scale using cloud platforms like AWS SageMaker, Google AI Platform, or Azure ML.

- Monitoring and Maintenance

- Apply monitoring solutions to log performance metrics such as latency, prediction accuracy, data drift, and resource usage.

- Build automated retraining pipelines to preserve accuracy over time by incorporating new data.

- Set up thresholds and alerts using monitoring tools like Prometheus or Grafana to respond effectively to performance outliers.

- Security and Compliance

- Protect data and models using encryption, access controls, and secure APIs to mitigate risk.

- If sensitive or user-specific data is involved, ensure compliance with regulations such as GDPR, CCPA, or HIPAA during the deployment.

- Continuous Improvement

- Track issues and integrate them into the next update iteration.

- Conduct A/B testing on updated models to confirm improvements are made without degrading performance.

By thoroughly implementing these recommendations and adjusting specific parameters, any project can achieve effective deployment and maintenance of model performance over time.

Automation and Governance in MLOps

As with any sophisticated system such as MLOps, automation, and governance components exist. For MLOps, my priority is compliance and accountability, which is synonymous with governance. Automation reduces manual tasks through integration with CI/CD pipelines for model training, testing, and deployment, which decreases errors while increasing the speed of iterations. Governance defines what a model needs to accomplish, including security, ethics, and compliance. I implement effective governance through models, audit trails, data lineage, and version control of models and datasets. These elements combine premade robust infrastructure with automation and governance, allowing for scalable and transparent machine learning operations governance.

Ensuring Effective Model Training and Retraining

Following well-defined and relevant best practices is the only way to maintain effective model training and retraining. First, mitigating bias and improving generalization through thorough and diverse datasets is crucial. Multidimensional monitoring of models using precision, accuracy, recall, and other indicators should be done regularly to identify areas that need improvement.

Specify a strong pipeline for retraining in the case of concept drift or any pattern change. Ensure that you continuously refresh the training data with new trends and real-world modifications to increase the model's accuracy in production environments. While automation tools can ease the burden of the retraining process, governance can ensure that ethical and legal standards are met when updates are made.

Double-check and confirm that the outcome is achieved through thorough testing before and after the retraining stage. Cross-validation A/B testing is a perfect tool for verifying that updates are executed and performance levels are enhanced without any unwanted errors. Regular validation and monitoring, coupled with consistent retraining, will ensure long-term ease in MLOps tasks and success in operations.

What are the Benefits of MLOps for Data Scientists?

MLOps puts forth a flexible structure for data scientists and enhances their productivity by automating repetitive tasks such as model deployment and data preprocessing. Consequently, data scientists can devise new solutions since their need for manual intervention is lowered. It also improves collaboration among divisions as teams are provided with unified processes and tools that help smooth the transition from development to production. In addition, there is enhanced model reliability and reduced errors, leading to more precise and robust results being generated. This is made possible through consistent validation and monitoring of previously deployed models. All in all, MLOps improves efficiency and scalability while ensuring a more advanced experience of the machine learning lifecycle.

Enhancing Workflow Efficiency with MLOps

Machine Learning Operations, or MLOps, enhances the efficiency of workflows by facilitating the machine learning lifecycle, including development, deployment, and maintenance. MLOps resolves creeping issues such as collaboration silos or model drift, enabling teams to achieve consistent and scalable results. The following is a summary of the practical advantages :

- Streamlined Collaboration:

MLOps enhances productivity by filling the gap between data science and engineering teams with well-defined processes and tools, thus improving communication and integration across teams during the production pipeline.

- Accelerated Deployment:

The automation of mundane activities ensures a faster deployment turnaround. CI/CD (Continuous Integration/Continuous Deployments) pipelines allow for needed iterations, ensuring market speed.

- Improved Model Reliability:

Integrating Multi-Operational Processing Frameworks enables real-time monitoring, anomaly detection, and validation processes, guaranteeing MLOP accuracy over time and not suffering from model drift, which is the slow degradation of a system's performance.

- Scalability:

MLOPS-enabled architectures like Kubernetes or AWS Sage Maker allow the models to perform scaling within cloud or hybrid environments, making them accessible to large data sets.

Technical Parameters:

- Version Control: Utilizing Git-based repositories for data, model, and code versioning (e.g., DVC, Git).

- Automation Tools: Automated deployment is achieved using CI/CD tools like Jenkins and GitLab.

- Monitoring Metrics:

- Latency (<100ms for real-time predictions).

- Drift detection thresholds: For example, a statistical method detects a 5% to 10% change in data distribution.

- Infrastructure Resources:

- CPU/GPU Utilization: <75% is preferred for optimized runtime.

- Kubernetes pods for resource scaling and fault tolerance.

MLOps practices improve organizations’ workflow efficiency while maintaining robust performance and adaptability to evolving needs.

The Impact of MLOps on Data Science Projects

The structure provided by MLOps has transformed the approach to data science because it enables collaboration, scalability, and reliability throughout the machine learning life cycle. In my opinion, MLOps optimizes the monitoring and deployment of models, improving system uptime. Essential factors to evaluate are the model's retraining periods, which need to be matched to data drift checks (such as once every month or upon a 5% drift), and the infrastructure level metric, which in this case will be 70% CPU or GPU usage being the sweet spot. In addition, these systems have benefitted from automation tools designed for deployment, like CI/CD pipelines or Kubernetes. Not only do these improve deployment, but they also enable the system to be more resilient. The additional benefits include faster delivery in response to project requests and improved adaptability of models over time to provide consistent performance.

How Do MLOps Platforms Support Machine Learning Operations?

MLOps platforms consolidate machine learning operations by automating the entire lifecycle process, including data collection, analysis, model training, deployment, and monitoring of the ML system. They provide automated processes, version control for datasets and models, and continuous integration and deployment (CI/CD) tools. These platforms foster collaboration between data scientists and engineers, as well as business teams while maintaining scalability and reproducibility. In addition, these platforms monitor model performance and drift, allowing for precision and reliability interventions.

Features of a Robust MLOps Platform

An MLOps platform should provide comprehensive automation of the ML workflow, seamlessly spanning from data collection and analysis to model deployment. It must include versioning control to capture changing datasets and model revisions to guarantee reproducibility. Also, the platform should facilitate the CI/CD pipeline integration for easy model updates and deployment. The platform should also be scalable to accommodate dataset growth and model complexity. Monitoring is another option, letting users supervise model performance, drift, and preemptive adjustments. Powerful collaboration features are essential to direct the collective efforts of data science, engineering, and business departments.

Choosing the Right MLOps Platform for Your Needs

This section will cover how I select an MLOps platform for my organization. Scalability, automation, and collaboration are the three areas of focus that I take into consideration. It should facilitate the implementation of CI/CD pipelines for effortless deployments; complex and large datasets need to be supported, and there has to be sufficient real-time monitoring to guarantee that models are hitting their expected performance. Additionally, I put more importance on the platforms that foster collaborative relationships between the data science, engineering, and business strategy teams so that strategies and operational workflows can be integrated more efficiently. A strong candidate is given these requirements whilst also needing to work with the existing technology and offering some degree of freedom as my needs shift.

References

- MLOps Definition and Benefits - Databricks

- MLOps: What It Is, Why It Matters, and How to Implement It - Neptune.ai

- Overview of MLOps - Medium

Frequently Asked Questions (FAQ)

Q: MLOps, and why is it essential in machine learning?

A: MLOps stands for Machine Learning Operations, a set of practices that aim to reliably and efficiently deploy and maintain machine learning models in production. It is crucial because it bridges the gap between data scientists and operations teams, ensuring continuous delivery and integration of machine learning projects.

Q: How does MLOps enable the deployment of ML models?

A: MLOps enables the deployment of ML models by automating workflows and integrating them into the software development lifecycle. This includes automating feature engineering, model development, testing, and deployment, thereby streamlining the process of getting machine learning models into production.

Q: What role does continuous delivery play in MLOps?

A: Continuous delivery in MLOps ensures that machine learning models are always ready for production deployment. By automating the deployment process, MLOps allows for more frequent updates to models, incorporating new data and improving their accuracy and performance over time.

Q: How do operations teams benefit from using MLOps?

A: Operations teams benefit from MLOps by gaining a structured framework to manage machine learning systems. This includes monitoring models in production, ensuring they perform optimally, and facilitating collaboration with data scientists and machine learning engineers to improve and scale operations.

Q: What are some common challenges MLOps addresses in machine learning projects?

A: MLOps addresses several challenges, including managing the complexity of deploying machine learning models, ensuring reproducibility of results, automating workflows, and maintaining the performance of ML systems over time. It also provides tools for monitoring and updating models based on new data.

Q: How does MLOps integrate with existing software engineering practices?

A: MLOps integrates with existing software engineering practices by incorporating principles of continuous integration and continuous delivery (CI/CD) specific to machine learning. It ensures that ML models are treated as integral parts of the software development process, thus aligning with broader business objectives.

Q: What is the significance of automating feature engineering in MLOps?

A: Automating feature engineering in MLOps is significant because it reduces the time and effort required to prepare datasets for model training. By automating this step, data scientists can focus on developing better models while ensuring consistency and reliability in the data used for training.

Q: Why do machine learning engineers need MLOps practices?

A: Machine learning engineers need MLOps practices to manage the lifecycle of machine learning models efficiently. MLOps provides them with tools and methodologies to develop, deploy, and monitor models, ensuring they are scalable, reliable, and aligned with business needs.

Q: How does MLOps handle new data in machine learning systems?

A: MLOps handles new data by incorporating mechanisms for continuous integration and deployment. This enables models to be retrained and updated as new data becomes available, helping maintain the accuracy and relevance of machine learning models over time.