Discover the Best MLOps Tools for 2025: A Comprehensive Guide to End-to-End Machine Learning Platforms

Every machine learning model needs dependable tools and workflows for its construction, deployment, and maintenance, especially at scale. This is the gap MLOps aims to fill: it combines machine learning, DevOps, and data engineering to cover the entire model lifecycle, from building a model to operating it in production.

This guide describes the main features, advantages, and integration points of the leading MLOps tools for 2025. It walks through model development, versioning, deployment, monitoring, and scaling, along with the essential tools at each stage. By the end of this article, readers should understand the value these tools create, how they streamline collaboration, and how they raise the overall productivity of machine learning work.

What Are They and Why Are They Important?

These tools are pivotal to the machine learning ecosystem because they streamline and automate work across the ML workflow. They support efficient model building, systematic versioning, and straightforward deployment and monitoring. Using them reduces operational burden, improves reproducibility, and frees teams to focus on building impactful, scalable solutions. Better collaboration, faster project turnaround, and more effective use of machine learning are what make these tools valuable.

Understanding Operations

Coming to terms with operations means identifying the foundational processes that keep machine learning workflows efficient. Here, operations refers to managing, deploying, and monitoring machine learning models. I think it is essential to provide tools and frameworks that automate successive steps, preserve model integrity, and keep systems robust. With those in place, I can build scalable models with fewer errors and less layered operational complexity. Strong teamwork and adequate monitoring also go a long way toward keeping every development and deployment phase efficient without sacrificing speed.

The Role of AI in Modern Development

Artificial intelligence (AI) is now applied at nearly every stage of development and across many fields. Frameworks such as TensorFlow, PyTorch, and scikit-learn automate much of the low-level work of building and training models. Machine learning algorithms such as random forests, support vector machines, and neural networks help developers build accurate, well-performing models.

Important Technical Specifications:

- Model Accuracy: For production models, set a target accuracy, for example at least 90%, that is appropriate to the use case.

- Training Set Size: Improve generalization with large, diverse datasets (e.g., thousands to millions of samples).

- Latency: Optimize real-time applications for end-to-end latency of less than 100 milliseconds.

- Resource Usage: Ensure models are sufficiently optimized for memory and processing power, especially in edge AI applications.

- Monitoring: Set up tools such as MLflow (experiment and metric tracking) or Prometheus (time-series metrics and alerting) to gather performance metrics after deployment.

Meeting these targets supports an incremental approach to AI development and helps the resulting models deliver business value.
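The accuracy and latency targets listed above can be turned into an automated check before a model is promoted. The following is a minimal sketch in Python, not a definitive implementation: `toy_predict`, the sample inputs, and the function names are hypothetical stand-ins, and the two thresholds simply reuse the 90% and 100 ms figures from the list.

```python
import time
from statistics import quantiles

# Thresholds taken from the list above; adjust them per use case.
MIN_ACCURACY = 0.90
MAX_P95_LATENCY_MS = 100.0


def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def p95_latency_ms(predict, inputs):
    """Time each call to `predict` and return the 95th-percentile latency in ms."""
    timings = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        timings.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return quantiles(timings, n=20, method="inclusive")[-1]


def passes_release_gate(acc, latency_ms):
    """True only when both the accuracy and the latency targets are met."""
    return acc >= MIN_ACCURACY and latency_ms <= MAX_P95_LATENCY_MS


# Hypothetical stand-in model: predicts 1 for non-negative inputs.
def toy_predict(x):
    return 1 if x >= 0 else 0


inputs = [-2, -1, 0, 1, 2, 3, -3, 4, 5, -4]
labels = [0, 0, 1, 1, 1, 1, 0, 1, 1, 0]

acc = accuracy([toy_predict(x) for x in inputs], labels)
lat = p95_latency_ms(toy_predict, inputs)
print(f"accuracy={acc:.2f} p95_latency_ms={lat:.4f} gate={passes_release_gate(acc, lat)}")
```

In practice the latency measurement would run against the deployed endpoint rather than an in-process function, so that network and serialization time are included.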

How to Enhance the Performance of AI Models

Several approaches, drawn from academic studies and industry practice, can improve the performance of AI models:

- Data Quality and Preprocessing: Use high-quality data and appropriate preprocessing. Data cleansing, normalization, and augmentation can all significantly improve a model's performance.

- Model Optimization:

  - Apply quantization and pruning to shrink models while maintaining acceptable performance.

  - Use pre-trained models and transfer learning to reduce time and resource expenditure.

  - Tune hyperparameters such as learning rate, batch size, and number of layers with tools like Optuna or Hyperopt to find near-optimal settings.

- Infrastructure and Deployment:

  - Invest in capable hardware, such as GPUs or TPUs, and in edge-optimized processors, like NVIDIA Jetson NX, where low latency is needed.

  - Apply model parallelism or distributed training for larger datasets or more complex models.

  - Adopt containerization with tools like Docker and orchestration with Kubernetes for scalable AI services.

- Efficient Algorithms and Frameworks:

  - Deploy to edge devices with frameworks optimized for efficiency, such as TensorFlow Lite or PyTorch Mobile.

  - Choose purpose-built models, such as YOLO for object detection or BERT for natural language processing.

- Real-time Performance Monitoring:

  - Track live metrics such as latency, throughput, and accuracy with tools like MLflow or Prometheus.

  - Set up automated alerts and dashboards to catch significant shifts in model behavior.

Combined with ongoing R&D, these practices help AI models perform efficiently in real-world applications.
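To make the quantization item above concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It illustrates the idea only and is not how any particular framework implements it; the weight values and function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [v * scale for v in quantized]


# Hypothetical weights standing in for one layer of a model.
weights = [0.82, -1.27, 0.05, 0.0, 0.64]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of four, and the rounding error per weight is bounded by half the scale. Whether that error is acceptable has to be confirmed by re-evaluating the model after quantization.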

How to Choose the Best for Your Needs?

Evaluate priorities such as performance, scalability, and ease of integration when selecting a solution. Start by defining your project goals and constraints, such as data, computing resources, and workload limits. Compare candidate models and tools on accuracy, operational latency, and value for cost. Make sure your choice stays practical to maintain and can absorb newer technologies over the long term. The decision comes down to finding the best fit between your goals and the strengths of the available options.
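One simple way to compare candidates against such priorities is a weighted scoring matrix. The sketch below is illustrative only: the criteria weights, the 1 to 5 scores, and the names `tool_a` and `tool_b` are all hypothetical and would come from your own evaluation.

```python
# Hypothetical criteria weights; they should sum to 1.0.
WEIGHTS = {"performance": 0.4, "scalability": 0.3, "integration": 0.2, "cost": 0.1}

# Hypothetical 1-5 scores for two made-up candidates.
candidates = {
    "tool_a": {"performance": 4, "scalability": 5, "integration": 3, "cost": 2},
    "tool_b": {"performance": 3, "scalability": 3, "integration": 5, "cost": 5},
}


def weighted_score(scores, weights):
    """Sum of score * weight over every criterion in `weights`."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


# Highest weighted score first.
ranking = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], WEIGHTS),
    reverse=True,
)

for name in ranking:
    print(name, round(weighted_score(candidates[name], WEIGHTS), 2))
```

The ranking is only as good as the weights, so it is worth checking whether small changes to them flip the result.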

Identifying Key Features of

When I assess a solution's features, I first evaluate how well it meets the specific needs of my project. Reliability, scalability, and performance are essential considerations. Equally important, in my view, is how practical the tool or interface is from a user-centered design perspective, since that directly affects implementation and adoption in my workflow. Cost must also be managed so that the value received justifies the investment. Finally, I assess how well the solution integrates with existing systems and how easily it can adapt to future developments.

Evaluating for Performance, Scalability, and Usability

When determining the effectiveness of a solution, analyze these essential parameters:

- Performance:

  - Response Time: Aim for a few milliseconds for real-time applications and a few seconds at most for regular operations.

  - Throughput: Measure how many requests or operations the system completes per second to confirm it can handle high workloads.

  - Error Rate: A low error rate, less than 0.1%, is vital for accuracy and reliability.

- Scalability:

  - Horizontal Scaling: The ability to add machines or nodes to a system to handle a larger workload.

  - Vertical Scaling: The ability to upgrade hardware, such as adding CPU and RAM, while the system keeps functioning correctly.

  - Load Balancing: The even distribution of incoming traffic across servers to prevent bottlenecks.

- Usability:

  - User Interface (UI): An intuitive, accessible design keeps the learning curve short for new users.

  - Cross-Platform Compatibility: The solution must work across various devices and operating systems.

  - Integration Capabilities: APIs or plugins must support existing systems for seamless incorporation.

Evaluating these parameters improves the chances of identifying a solution that meets current needs while leaving room for strategic growth.
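The performance parameters above (response time, throughput, and error rate) can be computed from a request log. The following sketch assumes a hypothetical `Request` record and a synthetic log; a real evaluation would read these values from load-test output or production telemetry.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class Request:
    timestamp_s: float  # when the request completed, in seconds
    latency_ms: float   # how long it took, in milliseconds
    ok: bool            # False if the request failed


def summarize(requests):
    """Return p95 latency, throughput, and error rate for a list of requests."""
    if len(requests) < 2:
        raise ValueError("need at least two requests")
    latencies = [r.latency_ms for r in requests]
    times = [r.timestamp_s for r in requests]
    window_s = max(times) - min(times)
    errors = sum(not r.ok for r in requests)
    return {
        # quantiles(n=20) yields 19 cut points; the last is the 95th percentile.
        "p95_latency_ms": quantiles(latencies, n=20, method="inclusive")[-1],
        "throughput_rps": len(requests) / window_s if window_s > 0 else float("nan"),
        "error_rate": errors / len(requests),
    }


# Synthetic log: 2000 requests spaced 10 ms apart, exactly one failure.
log = [
    Request(timestamp_s=i * 0.01, latency_ms=20 + (i % 10), ok=(i != 500))
    for i in range(2000)
]

report = summarize(log)
meets_error_target = report["error_rate"] < 0.001  # the 0.1% target from the list
print(report, meets_error_target)
```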

Comparing Open-Source vs. Commercial Solutions

The choice between commercial and open-source solutions rests on cost, flexibility, and the degree of support needed. Open-source solutions are often inexpensive and highly customizable; however, maintaining them without vendor support usually requires expensive technical talent. Commercial solutions cost more but provide a seamless user experience, integrated customer support, and polished interfaces. Commercial tools tend to lead in usability and dependability, while open-source tools offer greater versatility and community-driven improvement. Keep the organization's needs and long-term goals in view when deciding.

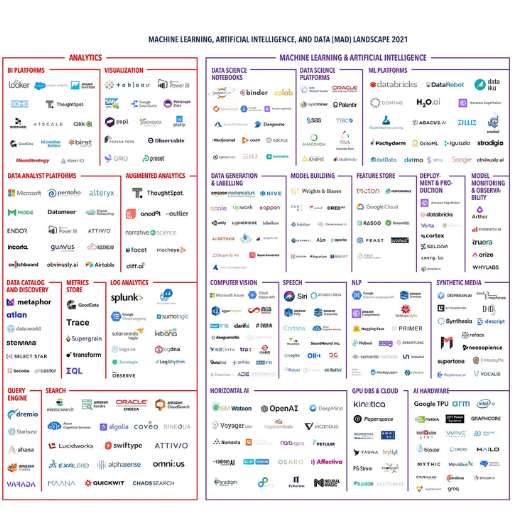

What Are the Top for in 2025?

In 2025, a greater focus is expected on automated systems, self-learning tools and technologies, and the cloud. AI is expected to spread across fields, setting new standards for predictive analysis and easier decision-making. Beyond AI, data-focused solutions will direct organizations toward extracting the value hidden inside their data. RPA and other software automation solutions will improve the efficiency and effectiveness of business processes. Demand for advanced cloud infrastructure and cyber forensics will continue in the expanding digital marketplace. Businesses must track market developments against their own objectives to incorporate the most valuable and relevant tools into their workflows and strategies.

An Overview of Leading Technology Trends

I believe the most active technology trends today center on AI, automation, and the cloud. AI is advancing analytics and making decision-making more efficient across sectors. Automation technologies like Robotic Process Automation (RPA) are essential for companies looking to improve business processes and increase productivity while lowering costs. At the same time, organizations use cloud services to manage their infrastructure and securely meet changing digital business needs. Prioritizing these technologies helps organizations stay competitive and respond to the demands of a data-centric world.

Key Features of Automation and Cloud-Based Services

- Enhanced Efficiency

Using automation tools such as Robotic Process Automation (RPA) increases operational speed while improving accuracy because repetitive tasks are completed without human intervention. For instance, RPA systems complete high-volume data entry tasks in a fraction of the time manual methods take.

- Scalability

Cloud services can scale allocated resources up or down to match the organization's requirements. Technologies such as virtual machine clustering and elastic storage let businesses accommodate fluctuating workloads.

- Cost Optimization

Automation reduces manual labor, and the pay-as-you-go pricing offered by cloud services adds significant savings. This shows up most clearly as a lower Total Cost of Ownership (TCO) for infrastructure and IT system maintenance.

- Data Security And Compliance

Cloud solutions often protect sensitive data with multi-factor authentication and advanced encryption, and support compliance with regulations such as GDPR and HIPAA.

- Improved Decision-Making

Automation and cloud-based solutions expand analytics capabilities. Automation supports consistent, accurate data collection, while cloud platforms supply the computational power for real-time analytics and AI/ML insights.

- Accessibility And Collaboration

Cloud-based platforms offer tools that facilitate collaboration and allow remote accessibility. Integrated project management systems and shared databases enhance efficiency in remote work environments.

Combining these capabilities lets businesses get the most from automation and cloud solutions while staying competitive.
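The scaling behavior described under Scalability usually comes down to a proportional rule: grow or shrink the number of replicas in line with how far current load is from a target. The sketch below follows the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current replicas × current metric / target metric)); the function name, the replica bounds, and the utilization figures are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas].

    Metrics are given as whole-number percentages (e.g. 90 for 90% CPU)
    to avoid floating-point surprises right at a ceiling boundary.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical readings: 4 replicas with a 60% CPU utilization target.
scale_up = desired_replicas(4, current_metric=90, target_metric=60)
scale_down = desired_replicas(4, current_metric=20, target_metric=60)
capped = desired_replicas(8, current_metric=100, target_metric=50)
print(scale_up, scale_down, capped)
```

Real autoscalers add tolerances and cool-down periods on top of this rule so that brief spikes do not cause constant resizing.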

Case Studies of Successful Automation and Cloud Integration

- Netflix - Scaling Made Simple and AI Integration

Netflix relies on cloud computing for its global streaming service. It runs on AWS, which lets it scale smoothly as viewer numbers rise and fall. Netflix also uses automation to improve the user experience and to power its AI recommendation system, which draws on viewer behavior in real time to offer suggestions.

- Airbnb - Saving Time and Enhancing Reliability

Airbnb migrated its infrastructure to AWS for improved reliability and performance. The platform relies on automated, cloud-based tools to manage millions of listings and transactions daily. Automated monitoring and fault detection maintain a consistent user experience while enabling rapid problem-solving.

- Coca-Cola - Efficient Supply Chain Management

Coca-Cola moved to Microsoft Azure to improve data accessibility across global teams and supply chain operations. It incorporated automation into its cloud systems to eliminate manual data entry, which enabled the company to track production and inventory in real time. This improved operational efficiency and supported timely decision-making.

These cases demonstrate the impact of cloud technology and automation on innovation, operational effectiveness, and competitiveness across industries.

How Do Platforms Improve?

To enhance operational efficiency, a platform must leverage automation and foster collaboration while keeping stringent security measures in place. AI technologies can reinforce all three. Higher productivity, engaging user interfaces, and the adoption of advanced technologies such as big data analytics drive further improvement. User feedback loops keep the platform scalable and able to adapt to changing user expectations.

Streamlining the Process

I focus on user experience design and on adopting advanced technologies to streamline the process. Automation and machine learning are integrated to simplify work and improve efficiency. Feedback is captured and analyzed to strengthen security protocols. Because the platform serves many kinds of users, collaboration with all of them is essential to keep solutions aligned with market needs. This approach helps deliver an enhanced, optimized platform experience.

Enhancing Collaboration

Improving collaboration within a team and with other departments requires appropriate tools, methods, and communication approaches. Here are some widely recommended tactics:

- Use collaborative software: Slack and Microsoft Teams enable chat, file sharing, and quick exchanges. They improve workflow by keeping team members connected and productive.

- Establish routine communication processes: Develop processes that guarantee crucial information is communicated promptly. For instance, daily stand-ups or weekly meetings keep everybody aligned and working toward the same goals.

- Cultivate a collaborative environment: Build an inclusive environment in which everybody can speak up and share ideas. This can be accomplished by rewarding contributions and giving every team member an equal chance to weigh in.

- Set targets and assess outcomes:

  - Task completion rates, response times to questions, and customer satisfaction are essential KPIs.

  - Tools such as Jira or Monday can visualize progress against predefined milestones.

- Use technical parameters as yardsticks:

  - Video conferencing needs a stable connection. A shared office link of 100 Mbps leaves ample headroom, since a single HD call typically uses only a few Mbps.

  - For easy sharing, use encrypted cloud storage such as Google Drive or OneDrive with at least 1 TB of space.

  - Protection Methods: Use multi-factor authentication and end-to-end encryption to secure sensitive collaboration data.

Teams can achieve better results by combining these approaches with appropriate technology.

Automating

Automation is primarily about making processes more efficient by eliminating unnecessary repetition. In my experience, workflow automation software, voice assistants, and project management tools significantly reduce manual input. Good examples are automated email responses, data entry, and scheduling, which can easily save hours of work. By identifying repetitive processes in your workflow and applying suitable tools, you can increase productivity and free team members to concentrate on more strategically important work.

What Are the Benefits of Using MLOps?

MLOps improves the efficiency, scalability, and reliability of machine learning models. It automates workflows and integrates processes such as development, validation, and deployment, saving time and effort in model management. Other benefits include better collaboration between teams, reproducible models, and reduced risk in production environments. MLOps also helps use resources efficiently, allowing businesses to scale their AI initiatives while preserving the performance and accuracy of their models.

Cost-Effectiveness of Solutions

In my opinion, automating repetitive processes significantly reduces manual effort and operational costs, which makes automation a key pillar of cost-effectiveness in MLOps solutions. Adopting cloud-based platforms and similar tools keeps initial costs low and lets spending adapt as needs evolve. Efficient team collaboration, which reduces redundant work and simplifies workflows, also saves costs over the long term. Businesses that focus on these strategies stand to gain the most from their machine learning investments while keeping performance and accuracy high.

Community Support and Engagement

Fostering an open exchange of ideas with a community of active developers, researchers, and practitioners is vital for successfully implementing machine learning projects. Involvement with open-source communities opens new opportunities for learning and problem-solving and keeps users informed about current changes. Active community participation surfaces effective solutions and fosters innovation and productivity. Some of the primary considerations for community involvement are:

- Documentation Quality: To foster engagement and collaboration, clear instructions for the tools, models, and activities must be provided.

- Open Communication Channels: Discussion threads, forums, issue reports on GitHub, and Slack channels make interaction easy.

- Timely Updates: Solutions must evolve with modern technologies, so regular changes based on community input are necessary.

- Knowledge Sharing: Contributions from diverse perspectives, through blogs, tutorials, and webinars, should be welcomed and used to share knowledge.

By drawing on the resources of a collaborative community, organizations can boost their machine learning work and set up a cycle of development and growth that compounds over time.

Flexibility in Machine Learning Design

Flexibility in the design of machine learning systems comes from adaptable technology. My work revolves around building modular and scalable systems that can grow with additional data and use cases. I use open-source tools and feedback from several communities to keep solutions agile, relevant, and robust. Such practices encourage creativity and help organizations keep improving in a demanding technological environment.

What Challenges Do Face with?

One of the primary challenges is coping with rapid technological change, including the need for scalable and flexible solutions. It is sometimes difficult to combine innovation with practical execution, particularly when multidisciplinary teams and their differing inputs must be integrated. Keeping pace and maintaining compatibility with the rest of the toolchain also demands continual learning.

Overcoming Hurdles

Working with people from different regions requires clear, unified communication around project objectives, which I support with agile methodologies. To resolve functional limitations and support system growth, I rely on careful architecture design and extensive testing, including cloud infrastructure solutions.

Technical Aspects Considered

- Growth Potential: Use scalable cloud services, such as AWS and Azure, which expand storage capacity elastically.

- Integration: Connect existing systems with modern solutions, running extensive compatibility checks during the integration phases.

- Flexibility: Use Agile, Scrum, or similar frameworks to allow frequent changes throughout the project's lifecycle.

Ensuring Scalability and Reliability with Cloud Solutions

I use cloud services such as AWS, Azure, or Google Cloud for elastic scaling. Monitoring with tools such as CloudWatch or Azure Monitor helps catch performance issues early. I focus first on a microservices architecture, which allows individual components to scale without affecting the whole system. Next, I deploy across multiple regions for redundancy and add automatic failover to maintain uptime during unforeseen events.
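The failover idea can be sketched as choosing the first healthy region from a priority list. This is a deliberately small illustration: the region names and the static health table are hypothetical, and a real setup would rely on the cloud provider's health checks and traffic routing rather than application code.

```python
def pick_region(regions, is_healthy):
    """Return the first healthy region in priority order, or None if all are down."""
    for region in regions:
        if is_healthy(region):
            return region
    return None


# Hypothetical region names, listed in priority order.
REGIONS = ["primary-region", "secondary-region", "tertiary-region"]

# Static health table standing in for real health probes.
health = {
    "primary-region": False,   # simulated outage
    "secondary-region": True,
    "tertiary-region": True,
}

active = pick_region(REGIONS, lambda region: health.get(region, False))
print(active)
```

Treating an unknown region as unhealthy (the `False` default) is the safer choice here, since traffic is never routed to a region whose status has not been confirmed.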

Addressing and Iterations

- How do you guarantee the optimization and scalability of the system?

To keep the system optimized and scalable, I build services on a microservices architecture, which lets me scale individual components independently. Performance monitoring tools such as CloudWatch and Azure Monitor detect issues early. Stability and uptime come from multi-region deployments and redundancy features like automatic failover.

- What do you do for reliability and fault tolerance?

My approach to reliability relies on multi-region deployments, which distribute workloads to reduce the risk of a regional outage. Automatic failover mechanisms maintain continuity during unexpected disruptions, while monitoring allows quick identification and resolution of suspected bottlenecks.

- What do you do to manage and monitor potential issues proactively?

Proactive management includes automated real-time monitoring, alerts for breaches of predefined thresholds, and periodic system health checks. This approach lets me handle anomalies before they noticeably affect system performance and user experience.
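Threshold-based alerting of this kind can be sketched in a few lines. The metric names, limits, and `Threshold` type below are hypothetical; monitoring services such as CloudWatch or Azure Monitor provide their own alarm configuration, which this does not attempt to reproduce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    direction: str  # "above": alert when value > limit; "below": when value < limit


def check_thresholds(sample, thresholds):
    """Return one alert message per breached or missing metric."""
    alerts = []
    for t in thresholds:
        value = sample.get(t.metric)
        if value is None:
            # A metric that stops reporting is itself worth an alert.
            alerts.append(f"{t.metric}: no data")
            continue
        breached = value > t.limit if t.direction == "above" else value < t.limit
        if breached:
            alerts.append(f"{t.metric}={value} is {t.direction} limit {t.limit}")
    return alerts


# Hypothetical thresholds and one metrics sample.
thresholds = [
    Threshold("latency_ms", 100.0, "above"),
    Threshold("accuracy", 0.90, "below"),
    Threshold("error_rate", 0.001, "above"),
]
sample = {"latency_ms": 140.0, "accuracy": 0.93}

alerts = check_thresholds(sample, thresholds)
for message in alerts:
    print(message)
```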

Frequently Asked Questions (FAQ)

Q: What are MLOps tools, and why are they essential for machine learning projects in 2025?

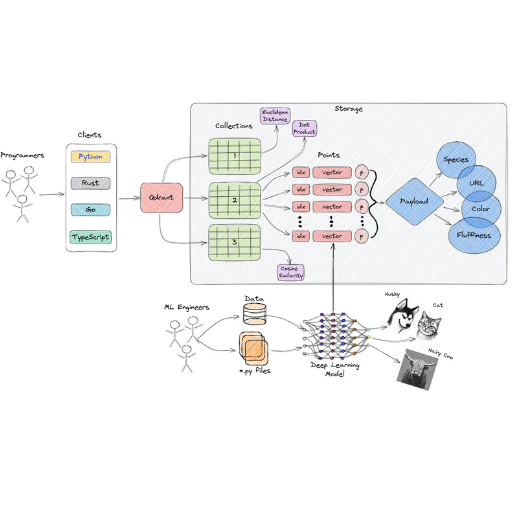

A: MLOps tools are software solutions that facilitate developing, deploying, and managing machine learning models. They are essential for machine learning projects as they streamline the workflow, automate the lifecycle, and improve collaboration between data scientists and machine learning teams. They ensure efficient ML pipeline management and enhance machine learning model deployment.

Q: How do MLOps platforms support the end-to-end machine learning lifecycle?

A: MLOps platforms support the end-to-end machine learning lifecycle by providing a comprehensive suite of tools that cover everything from data ingestion and model training to deployment and monitoring. These platforms integrate various machine learning libraries and frameworks, enabling seamless transitions between different stages of the ML pipeline and facilitating continuous integration and delivery (CI/CD) of machine learning models.

Q: What are the benefits of using open-source tools in MLOps?

A: Open-source tools in MLOps offer several benefits, including cost-effectiveness, flexibility, and community support. They allow data scientists to customize and extend tools to meet specific project needs, provide access to a large pool of shared knowledge and resources, and enable rapid innovation and collaboration in the data science and machine learning community.

Q: How do serving tools contribute to deploying machine learning models?

A: Serving tools are essential for deploying machine learning models. They provide the infrastructure required to offer ML models as scalable and reliable services. These tools facilitate the real-time serving of predictions, manage load balancing, and ensure that the models are accessible to applications and users, closing the loop in the machine learning operations process.
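As a rough illustration of the load-balancing part, the sketch below routes prediction requests across model replicas in round-robin order. The replicas are hypothetical in-process functions; real serving tools distribute traffic across network endpoints and also handle health checks and retries.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Send each request to the next replica in a fixed rotation."""

    def __init__(self, replicas):
        if not replicas:
            raise ValueError("at least one replica is required")
        self._replicas = cycle(replicas)

    def route(self, features):
        replica = next(self._replicas)
        return replica(features)


def make_replica(name):
    """Build a hypothetical replica that tags its responses with its name."""
    def predict(features):
        # Toy model: positive when the feature sum exceeds 1.0.
        return {"replica": name, "prediction": sum(features) > 1.0}
    return predict


balancer = RoundRobinBalancer([make_replica("replica-0"), make_replica("replica-1")])
responses = [balancer.route([0.4, 0.9]) for _ in range(4)]
served_by = [r["replica"] for r in responses]
print(served_by)
```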

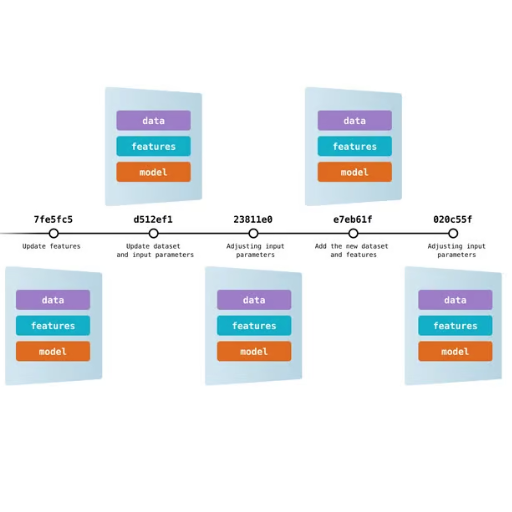

Q: What role does model metadata management play in MLOps?

A: Model metadata management tools play a crucial role in MLOps by tracking and storing metadata related to machine learning experiments, such as model parameters, training data, and version history. This information is vital for reproducibility, auditing, and collaboration, enabling machine learning teams to manage machine learning projects more effectively and ensure compliance with industry standards and regulations.
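A minimal sketch of what such a metadata record might hold is shown below. The `ModelRecord` fields, the model name, and the sample rows are hypothetical; dedicated tools store far more detail, but the core idea of pairing parameters and metrics with a fingerprint of the training data is the same.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    name: str
    version: int
    params: dict
    training_data_sha256: str
    metrics: dict = field(default_factory=dict)


def fingerprint(rows):
    """Hash the training rows so a later run can confirm it used the same data."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the hash independent of dict key order.
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


# Hypothetical training rows and model details.
rows = [{"x": 1.0, "y": 0}, {"x": 2.5, "y": 1}]

record = ModelRecord(
    name="example-classifier",
    version=3,
    params={"learning_rate": 0.01, "batch_size": 32},
    training_data_sha256=fingerprint(rows),
    metrics={"accuracy": 0.93},
)

# Serialize to JSON so the record can be stored next to the model artifact.
serialized = json.dumps(asdict(record), sort_keys=True, indent=2)
print(serialized)
```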

Q: How can monitoring tools improve the performance of deployed machine learning models?

A: Monitoring tools improve the performance of deployed machine learning models by continuously tracking metrics such as model accuracy, latency, and resource utilization. They provide alerts for anomalies and drift in data or model performance, allowing data scientists to take corrective actions promptly. This ensures that the models maintain high performance and reliability throughout their lifecycle.
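One common way to quantify data drift is the Population Stability Index (PSI), which compares how a feature is distributed in live traffic against a baseline. The sketch below is a simplified version with equal-width bins; the sample data are synthetic, and the often-quoted cutoff of about 0.25 for a significant shift is a rule of thumb rather than a fixed standard.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a new sample."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline has no spread")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = max(0, min(bins - 1, idx))  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data: a uniform baseline and a copy shifted upward by 0.5.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in baseline]

print(psi(baseline, baseline), psi(baseline, shifted))
```

A monitoring job could compute this per feature on a schedule and raise an alert when the value crosses the chosen cutoff.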

Q: What are the top MLOps tools to consider for managing machine learning projects in 2025?

A: Some of the top MLOps tools to consider in 2025 include Iguazio MLOps Platform, which offers a comprehensive MLOps solution, as well as open-source tools like MLflow for experiment tracking, Kubeflow for orchestration of ML workflows, and TensorFlow Extended (TFX) for ML pipelines. These tools and platforms provide robust capabilities for managing end-to-end machine learning workflows efficiently.

Q: How do MLOps practices enhance collaboration among machine learning teams?

A: MLOps practices enhance collaboration among machine learning teams by establishing standardized processes and tools that facilitate communication and coordination. By leveraging shared infrastructure, version control, and project management tools, teams can work more cohesively, reduce silos, and streamline the development and deployment of machine learning models.

Q: What are the key features to look for in an end-to-end MLOps platform?

A: Key features to look for in an end-to-end MLOps platform include support for diverse data sources, integration with popular machine learning libraries, robust pipeline orchestration capabilities, automated model deployment and monitoring, and tools for model metadata management. A comprehensive MLOps platform should also offer scalability, security, and compliance features to meet enterprise-level requirements.

Q: How do end-to-end MLOps platforms streamline the ML pipeline?

A: End-to-end MLOps platforms streamline the ML pipeline by providing an integrated environment where all stages of the machine learning lifecycle are connected and automated. This reduces manual intervention, minimizes errors, and accelerates the time from model development to production deployment, ultimately enabling faster and more efficient machine learning operations.
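The idea of connected, automated stages can be sketched as a list of functions that pass a shared context along, with a quality gate that stops the run before deployment. Everything here is a toy stand-in: the stage functions, the threshold "model", and the 0.9 gate are hypothetical and only show the control flow.

```python
def run_pipeline(stages, context):
    """Run stages in order; stop early if a stage sets context['halt']."""
    for name, stage in stages:
        context = stage(context)
        context.setdefault("completed", []).append(name)
        if context.get("halt"):
            break
    return context


def ingest(ctx):
    # Toy dataset: label is 1 when x >= 5.
    ctx["data"] = [(x, int(x >= 5)) for x in range(10)]
    return ctx


def train(ctx):
    # "Training" picks the threshold with the fewest mistakes on the data.
    data = ctx["data"]
    ctx["threshold"] = min(
        range(11), key=lambda t: sum(int(x >= t) != y for x, y in data)
    )
    return ctx


def evaluate(ctx):
    data, t = ctx["data"], ctx["threshold"]
    ctx["accuracy"] = sum(int(x >= t) == y for x, y in data) / len(data)
    ctx["halt"] = ctx["accuracy"] < 0.9  # quality gate before deployment
    return ctx


def deploy(ctx):
    ctx["deployed"] = True
    return ctx


STAGES = [("ingest", ingest), ("train", train), ("evaluate", evaluate), ("deploy", deploy)]
result = run_pipeline(STAGES, {})
print(result["completed"], result["accuracy"], result.get("deployed", False))
```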