Discover the Best MLOps Platform for Your AI Projects

Selecting an MLOps platform is essential for AI model development, as it can make or break the success of the project. This post discusses what MLOps capabilities are essential to make the platform effective and how the right MLOps tools can help manage project workflows. From development, deployment, and monitoring to scaling a model, we will provide an evaluative structure to help you choose the best possible platform to suit your needs. Whatever your role, be it a data scientist, engineer, or decision maker, this guide will help improve synergy and effectiveness in your AI processes.

What Is an MLOps Platform and Why Do You Need It?

An MLOps platform is a centralized system that supports the development, deployment, and monitoring of machine learning (ML) projects. It automates data processing, model training, versioning, and monitoring, thereby streamlining workflows. The goal of an MLOps platform is to improve collaboration among data scientists, engineers, and operations teams while preserving scalability, reproducibility, and efficiency. With an MLOps platform, organizations can achieve faster time-to-market, better model accuracy, and consistency across AI projects, which can translate into improved business performance.

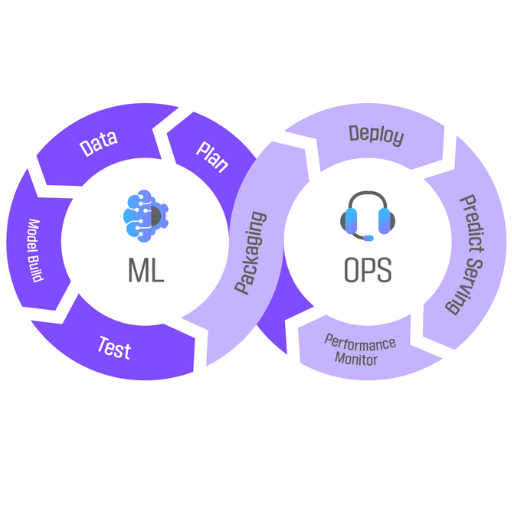

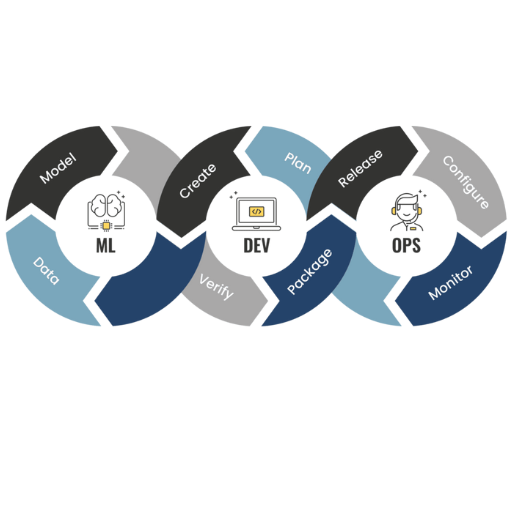

Understanding the Role of MLOps in ML Projects

From what I know, MLOps ensures efficient and reliable workflows by enhancing the productivity of machine learning processes and operations. It connects data scientists, engineers, and operations teams with the necessary business practices and tools for model versioning, automated deployment, and monitoring. MLOps enables organizations to streamline their AI strategies. With MLOps, I can enhance collaboration, shorten deployment timelines, and control model performance, which helps to innovate and make decisions more effectively and quickly.

Benefits of Using an MLOps Platform for AI

An MLOps platform integrates and automates the operational processes of machine learning systems. Here are the primary advantages, each with a related technical parameter:

- Enhanced Collaboration

- Provides a single point of access to workflows and tools for engineers, data scientists, and other project stakeholders for streamlined cooperation.

- Technical Parameter: Integration of version control software (e.g., Git) for auditing modifications in models and pipeline datasets.

- Streamlined Model Deployment

- Enables rapid, semi-automated promotion of machine learning models to production environments.

- Technical Parameter: CI/CD services adapted to ML processes for continuous testing and deployment.

- Scalability

- Maintains performance without degradation under increased operational or data loads.

- Technical Parameter: Support for scalable cloud infrastructure integration, for example, Kubernetes, AWS, Azure, with resource elasticity.

- Monitoring and Maintenance

- Maintains model dependability by tracking accuracy and drift against thresholds over time.

- Technical Parameter: Model performance monitoring with dashboards and alerts for abnormal behavior (e.g., Prometheus, Grafana).

- Reproducibility

- Makes experiments and model training runs reproducible and transparent.

- Technical Parameter: Automatic logging of data, code, and hyperparameters to support workflow reproducibility.

With these features, an organization is better positioned to scale its AI activity without losing efficiency or model reliability.
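As a rough illustration of the drift monitoring mentioned above, here is a minimal sketch that assumes drift is measured with the Population Stability Index (PSI) over equal-width bins. The function name `population_stability_index`, the bin count, the sample data, and the 0.2 alert threshold are all illustrative assumptions, not something a particular platform prescribes.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one feature; larger values mean more drift."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bin_fractions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_fractions(expected), bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb alert level

training = [i / 100 for i in range(100)]          # spread evenly over [0, 1)
production = [0.5 + i / 200 for i in range(100)]  # shifted toward higher values

psi = population_stability_index(training, production)
print("drift alert" if psi > DRIFT_THRESHOLD else "no drift", round(psi, 3))
```

In practice a monitoring stack would compute a statistic like this on a schedule and raise an alert when it crosses the chosen threshold.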

Key Features of Top MLOps Platforms

Top MLOps platforms share three features: model monitoring, automated workflows, and integration with other tools. First, they offer real-time monitoring for effective tracking and anomaly identification. Second, they automate the steps of the ML lifecycle, from data collection and preparation to model training and deployment, which improves operational efficiency and accelerates delivery. Finally, they integrate with key tools and frameworks so that existing infrastructure can be kept in place. Together, these features make it practical to scale AI initiatives across the organization.

How to Choose the Best MLOps Platform for Your Needs

When determining which MLOps platform works best for you, here are some criteria to bear in mind.

- Scalability and Flexibility – It is necessary that the organization doesn't outgrow the platform. It is also helpful if the platform integrates with your existing tools and workflows.

- Usability – Identify a platform which makes the entire machine learning life cycle easier. This includes data preparation along with model training, deployment, and monitoring.

- Automation Capabilities – Platforms which offer comprehensive automation features are preferable, as they reduce manual work and speed up development cycles.

- Security and Compliance – The platform should meet industry standards and include the security measures needed to manage sensitive data.

- Support and Community – Evaluate the options for dedicated help as well as the prevailing user community for support and direction.

- Cost-Effectiveness – The chosen solution should fit within the financial plan without losing necessary features.

Focusing on these components will place you in a position to meet your operational objectives with the most suitable MLOps platform.
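One way to make the criteria above concrete is a weighted scoring matrix. The sketch below is only an illustration: the weights, the 1 to 5 scores, and the names `platform_a` and `platform_b` are hypothetical placeholders that you would replace with your own assessment.

```python
# Hypothetical criterion weights (summing to 1.0); adjust to your priorities.
WEIGHTS = {
    "scalability": 0.25,
    "usability": 0.20,
    "automation": 0.20,
    "security": 0.15,
    "support": 0.10,
    "cost": 0.10,
}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine per-criterion scores (1 = poor, 5 = excellent) into one number."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Made-up example scores for two unnamed candidate platforms.
candidates = {
    "platform_a": {"scalability": 5, "usability": 3, "automation": 4,
                   "security": 5, "support": 4, "cost": 2},
    "platform_b": {"scalability": 4, "usability": 5, "automation": 4,
                   "security": 4, "support": 3, "cost": 4},
}

ranking = sorted(candidates, key=lambda n: weighted_score(candidates[n]), reverse=True)
for name in ranking:
    print(name, round(weighted_score(candidates[name]), 2))
```

The value of the exercise is less in the final number than in forcing the team to agree on the weights before looking at vendors.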

Evaluating MLOps Tools and Platforms

When assessing MLOps tools and platforms, a few primary points from my research matter to me. My first concern is how easily my work can be scaled and how flexible the platform is for my current and anticipated workloads. Next, I make sure that integration does not pose any challenges, as it is vital for my operational workflows. Third, I examine the security features to determine whether the platform can safeguard sensitive information. I also consider the support options available and read user reviews to gauge the quality of community support. Lastly, I evaluate the different pricing structures to determine which one best balances cost against features for my requirements. These considerations help me reach a decision that fits my project objectives.

Comparing Popular MLOps Platforms

When comparing MLOps platforms, a few major considerations come up: their features, the scale a user can achieve, their integration options, and their costs. An overview of the three most often mentioned platforms, AWS SageMaker, Google Vertex AI, and Azure Machine Learning, is provided below:

- AWS SageMaker

Functionality: Offers complete MLOps support, including data annotation, model development, deployment, and tracking. It offers notebook instances that ease the process of testing.

Integration: Provides support for Amazon ecosystem services like integrating with S3, Lambda, and CloudWatch.

Scalability: Highly scalable; it can train models on numerous instance types, including GPU-backed options.

Technical Parameters:

- Training Instances Supported: CPU/GPU (P3, G5 types)

- Model Deployment Options: Real-time endpoints or batch transforms

- Pricing Model: Pay-as-you-go, billed for compute and storage usage.

Strengths: It is secure, has great customization options, and is efficient for enterprise-scale operations.

Weakness: Setup is more complex than on simpler platforms, and it can be more expensive.

- Google Vertex AI

Functionality: Focused on streamlining the machine learning life cycle, including customization, experimentation, and deployment, with MLOps supported through Vertex AI Pipelines.

Integration: Works with Google Cloud services such as BigQuery and Cloud Storage, as well as TensorFlow Extended.

Scalability: Fast resource scaling with support for AutoML and custom models.

Technical Parameters:

- Training and Prediction Options: Supports various options, including pre-trained and self-trained models.

- Pricing Model: Billed based on usage for prediction nodes, training nodes, and AI platform nodes.

Strengths: Documentation is very helpful, and AutoML guides users through much of the workflow.

Weakness: Does not offer much flexibility to users outside the Google Cloud ecosystem.

- Azure Machine Learning

Functionality: Supports model training and deployment, and facilitates scalable MLOps workflows by default, integrated with enterprise-grade features.

Integration: Deep integration with Microsoft Ecosystem applications, such as Power BI and Azure DevOps, enhances efficiency.

Scalability: Allows AI models to be trained in a distributed manner and deployed on edge devices.

Technical Parameters:

- Compute Types Supported: Deployable on Azure VMs, AML compute cluster, and Kubernetes.

- Model Monitoring Features: Automatic monitoring and model drift detection features are available.

- Pricing Model: Usage-based billing through an Azure subscription, covering the Azure services, compute, and storage consumed.

Strengths: Enterprise-grade features fit for IT-centric businesses are a plus.

Weakness: The learning curve is steep, so new users may need guidance.

Summary Table of Comparison:

| Feature | AWS SageMaker | Google Vertex AI | Azure Machine Learning |
|---|---|---|---|
| Ease of Use | Moderate | High | Moderate |
| Integration | AWS Ecosystem | Google Cloud | Microsoft Azure |
| Scalability | Excellent | Excellent | Excellent |
| Pricing Flexibility | Good | Good | Good |
| Best For | Enterprises needing customizable solutions | AutoML easy and fast experimentation | Enterprises with Microsoft integration needs |

Each platform has strengths suited to specific use cases. For businesses that require a high degree of customization, AWS SageMaker provides the most options. Google Vertex AI stands out for automation and ease of use. Azure Machine Learning is the strongest choice for integration across the Microsoft stack.

Understanding ML Pipeline and Integration Needs

When considering the requirements of ML pipelines, I look at how well a platform fits my business objectives and existing technology infrastructure. For instance, AWS SageMaker offers a high degree of customization, which is important if I need a higher level of control over modeling and deployment. Google Vertex AI stands out for its AutoML capabilities, which allow fast experimentation with little manual work. Azure Machine Learning, in turn, integrates closely with other Microsoft products, which is helpful for businesses that depend on Azure DevOps or Office 365. In the end, the complexity of my ML pipeline and the tools already available in my ecosystem determine which platform I choose.

How Does MLOps Enhance the Machine Learning Lifecycle?

MLOps supports AI and ML processes by automating the development, deployment, and management of ML models throughout their lifecycles. It enhances collaboration between data scientists and operations personnel so that models are built and deployed quickly and accurately. MLOps improves efficiency and reduces manual errors using automated workflows for training, testing, integration, monitoring, and continuous delivery. It also supports scalability and reproducibility of models within a dynamic production environment.

The Role of MLOps in Model Deployment

In my opinion, the MLOps paradigm is crucial for the effective implementation of machine learning models. It connects the data science and operations workstreams, which helps us deploy models more reliably and efficiently. Automating testing, integration, and other vital processes reduces errors and speeds up delivery cycles. In my view, MLOps simplifies collaborative efforts and provides scalability and reproducibility, all of which are important for making a model effective in the real world. With MLOps, we can continuously iterate on and improve the models so that they consistently meet performance expectations.

Streamlining Model Training and Evaluation

Setting up clear and efficient workflows for model training and evaluation is vital to producing meaningful business impact. Based on my experience, using methods such as hyperparameter optimization, pre-trained models, and good evaluation metrics meaningfully reduces time and effort. Automating such processes through TensorFlow and PyTorch pipelines makes results more consistent while reducing human error. Also, tracking experiments and outcomes with MLflow and Weights & Biases makes the process more insightful and increases reproducibility. These practices boost effectiveness and scalability, which are highly desired in modern MLOps.
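To give a feel for automated hyperparameter optimization, here is a minimal grid-search sketch in plain Python. The `validation_loss` function is a hypothetical stand-in for a real train-and-evaluate run, and the search space values are assumptions chosen only for illustration; a real project would more likely use a tuning library or a managed tuning service.

```python
import itertools
from typing import Callable


def validation_loss(learning_rate: float, batch_size: int) -> float:
    """Hypothetical objective standing in for training plus evaluation."""
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 64


# Assumed search space; every combination is tried once.
search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [32, 64, 128],
}


def grid_search(space: dict[str, list], objective: Callable[..., float]):
    """Evaluate every combination and return (best_loss, best_params)."""
    names = list(space)
    trials = []
    for values in itertools.product(*(space[n] for n in names)):
        params = dict(zip(names, values))
        trials.append((objective(**params), params))
    return min(trials, key=lambda trial: trial[0])


best_loss, best_params = grid_search(search_space, validation_loss)
print(best_params, best_loss)
```

Logging each trial's parameters and loss to an experiment tracker is what turns a search like this into a reproducible record.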

Integrating MLOps with Existing ML Workflows

MLOps automation can be tricky, especially when integrating it into an existing system. From what I have learned, focusing on automating the development, deployment, and monitoring of ML models yields the best results. Version control systems like Git are a good start, since code modifications are recorded sequentially. Next, using CI/CD and pipeline tools (e.g., Kubeflow or GitLab CI) automates the testing and deployment steps while also minimizing errors. Lastly, Prometheus and Grafana add real-time monitoring of model performance and system health. These steps are foundational to MLOps and make other considerations easier to handle, such as dataset scale, model latency, infrastructure scalability, and threshold checks for accuracy, precision, and recall.
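The threshold checks for accuracy, precision, and recall can be expressed as a small quality gate that a CI/CD job runs before promoting a model. This is a sketch under stated assumptions: the 0.7 thresholds, the toy labels, and the names `evaluate` and `passes_gate` are hypothetical, and the metrics are computed by hand for a binary classifier to keep the example dependency-free.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float


def evaluate(y_true: list[int], y_pred: list[int]) -> Metrics:
    """Compute binary-classification metrics from true and predicted labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return Metrics(
        accuracy=(tp + tn) / len(pairs),
        precision=tp / (tp + fp) if tp + fp else 0.0,
        recall=tp / (tp + fn) if tp + fn else 0.0,
    )


# Assumed minimum values a candidate model must reach before deployment.
THRESHOLDS = Metrics(accuracy=0.7, precision=0.7, recall=0.7)


def passes_gate(metrics: Metrics, thresholds: Metrics = THRESHOLDS) -> bool:
    return (
        metrics.accuracy >= thresholds.accuracy
        and metrics.precision >= thresholds.precision
        and metrics.recall >= thresholds.recall
    )


y_true = [1, 0, 1, 1, 0, 1, 0, 0]  # toy held-out labels
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]  # toy model predictions
metrics = evaluate(y_true, y_pred)
print(metrics, "deploy" if passes_gate(metrics) else "block")
```

A pipeline step would typically exit with a non-zero status when the gate fails, which stops the deployment stage from running.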

What Are the Best Practices for Implementing MLOps?

To properly implement MLOps, a certain level of infrastructure automation, collaboration, and robust systems is required. First, put not only the code but also the data under version control so that results can be reproduced. Second, automate workflows such as data preprocessing, model training, and deployment pipelines as much as possible to minimize manual effort, which in turn reduces the chance of human error. Third, encourage collaboration across data science, engineering, and operations for better alignment of communication and task objectives. In addition, proactively monitor models in production and capture their performance and drift in real time, for example, by using Prometheus and Grafana. As a general MLOps rule, never disregard security and compliance: follow data protection policies and control information flow in the pipeline. These practices support efficiency, scalability, and reliability in any MLOps implementation.
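Versioning data can start with something as simple as a content hash recorded next to each training run. The sketch below assumes SHA-256 over the raw bytes and a shortened 12-character fingerprint; the function name `dataset_fingerprint` and the tiny in-memory dataset are hypothetical. Dedicated tools such as DVC build on a similar content-addressing idea.

```python
import hashlib
from typing import Iterable


def dataset_fingerprint(chunks: Iterable[bytes]) -> str:
    """Hash dataset bytes incrementally so large files need not fit in memory."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()[:12]  # shortened for readability (an assumption)


# The same bytes give the same fingerprint regardless of chunk boundaries.
v1 = dataset_fingerprint([b"id,label\n", b"1,cat\n", b"2,dog\n"])
v1_again = dataset_fingerprint([b"id,label\n1,cat\n", b"2,dog\n"])

# Changing a single value produces a different fingerprint.
v2 = dataset_fingerprint([b"id,label\n", b"1,cat\n", b"2,bird\n"])

print(v1, v1 == v1_again, v1 != v2)
```

Storing the fingerprint with the model makes it possible to tell later exactly which data a given version was trained on.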

Ensuring Continuous Integration and Deployment

To minimize hands-on involvement and the possibility of mistakes, I automate every stage of the pipeline, including deployment. I rely heavily on version control systems like Git, because integrating frequent code changes and keeping every team member in sync is usually the most problematic part. Automated code-quality tests offer the opportunity to address problems proactively. Docker, a containerization platform, and Kubernetes, an orchestration tool, help me deploy in a more efficient, scalable, and repeatable manner. Combined with robust monitoring and logging frameworks, these practices let me maintain reliability while keeping the system updated at reasonable intervals.

Managing Model Version and Metadata

Effective reproducibility of ML models begins with systematic version control. Whatever combination of tools works best, adopting a versioning plan is necessary. One popular scheme is semantic versioning (e.g., v1.0.0 or v1.1.0), where the three numbers indicate the size of each change. The first number (major) increases for significant or incompatible updates, the second (minor) for smaller backward-compatible improvements, and the third (patch) for bug fixes. Model versions can be organized and stored with tools such as the MLflow Model Registry and DVC.
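The semantic versioning scheme described above can be sketched in a few lines. This is an illustrative helper, not part of MLflow or DVC; the class name `ModelVersion` and its methods are hypothetical, and how you map model changes (new architecture, retraining, bug fix) to the three levels is a team convention.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class ModelVersion:
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "ModelVersion":
        """Parse strings such as 'v1.2.1' or '1.2.1'."""
        major, minor, patch = (int(part) for part in text.lstrip("v").split("."))
        return cls(major, minor, patch)

    def bump(self, level: str) -> "ModelVersion":
        """Return the next version; lower-order numbers reset to zero."""
        if level == "major":
            return ModelVersion(self.major + 1, 0, 0)
        if level == "minor":
            return ModelVersion(self.major, self.minor + 1, 0)
        if level == "patch":
            return ModelVersion(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown level: {level!r}")

    def __str__(self) -> str:
        return f"v{self.major}.{self.minor}.{self.patch}"


current = ModelVersion.parse("v1.2.1")
print(current.bump("patch"), current.bump("minor"), current.bump("major"))
```

Because the fields are compared numerically and in order, `v1.10.0` sorts after `v1.9.3`, which plain string comparison would get wrong.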

For a consistent metadata layer, the following pieces of information can be recorded to improve traceability and tracking:

- Model Name: The name by which the model is identified.

- Version: The semantic version, e.g., v1.2.1

- Training Data Details:

- Data set version or hash to enable data traces.

- The steps involved in preprocessing the data.

- Hyperparameters:

- Learning rate, batch size, number of epochs, etc.

- Evaluation Metrics:

- Model performance measures such as precision, recall, accuracy, and F1 score, depending on the use case.

- Environment Details:

- The version of the framework or library, for example: TensorFlow 2.10, PyTorch 1.13

- Configuration of hardware - the GPU or CPU used for training.

- Deployment Status:

- Whether the model is in production, in staging, or archived.

Tools such as MLflow, ClearML, and Kedro can be integrated into automated pipelines where all required metadata is stored, managed, and retrieved. This improves management across a model's life cycle.
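To show what such a metadata record might look like, here is a minimal sketch using a dataclass serialized to JSON. The field names mirror the list above, but the class `ModelMetadata` and every value in the example record are hypothetical; tools like MLflow define their own schemas and storage.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ModelMetadata:
    name: str
    version: str
    dataset_hash: str
    preprocessing_steps: list[str]
    hyperparameters: dict[str, float]
    metrics: dict[str, float]
    environment: dict[str, str]
    deployment_status: str = "staging"  # e.g. staging, production, archived


# Illustrative values only; none of these come from a real model.
record = ModelMetadata(
    name="churn-classifier",
    version="v1.2.1",
    dataset_hash="3f2a9c1b7d4e",
    preprocessing_steps=["drop_nulls", "standard_scale"],
    hyperparameters={"learning_rate": 0.01, "batch_size": 64, "epochs": 20},
    metrics={"accuracy": 0.91, "f1": 0.88},
    environment={"framework": "PyTorch 1.13", "hardware": "GPU"},
)

# Serialize for storage alongside the model artifact, then read it back.
serialized = json.dumps(asdict(record), indent=2, sort_keys=True)
restored = ModelMetadata(**json.loads(serialized))
print(serialized)
```

Keeping the record as plain JSON means it can be stored with the model artifact and inspected without any special tooling.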

Utilizing Open-Source MLOps Tools

Open-source MLOps tools enable teams to better organize their machine learning workflows at a lower cost. MLflow makes tracking, versioning, and lifecycle management of models and experiments easier. Kubeflow is used to deploy and orchestrate scalable machine learning pipelines on Kubernetes, which suits production environments. ClearML is a one-stop solution for tracking and orchestrating experiments, along with managing data, and blends well into existing workflows. With these tools, I aim for automation, reproducibility, and scalability throughout the entire model lifecycle.

What Are the Top MLOps Tools and Platforms Available?

Some of the top tools and platforms for MLOps available today include:

- Kubeflow: A platform for deploying and managing ML workflows on Kubernetes that is highly popular due to its scalability and flexibility in production environments.

- MLflow: A platform that covers the machine learning lifecycle, including experiment tracking, model registration, and model deployment.

- ClearML: Known for seamless integration into workflows, it offers a suite of services like experiment tracking, orchestration, and dataset management.

- DataRobot: An automated ML platform that handles several ML tasks and also enables deployment. It is simple to use and quite scalable, which is a plus for any enterprise.

- Weights & Biases (W&B): This tool is tailored for collaboration between ML engineers and for tracking and visualizing performance metrics of experiments.

- TensorFlow Extended (TFX): A TensorFlow-specific platform built to manage production-scale ML pipelines.

- H2O.ai: A platform with AutoML capabilities for tasks such as model training and deployment for business purposes.

These tools can be combined or used individually, depending on the project-specific requirements, and cover different parts of the MLOps lifecycle.

Exploring Amazon SageMaker and Its Features

Amazon SageMaker is a fully managed AWS service that makes it easier to develop, train, and deploy machine learning (ML) models. The service aims to improve productivity and reduce the difficulty of maintaining ML workflows within organizations, and it helps developers and data scientists by combining many tools and functionalities into a single service.

Key Features of Amazon SageMaker

- Built-in Algorithms and Framework Support

Amazon SageMaker has a number of built-in high performing ML algorithms suitable for big data. It also supports TensorFlow, PyTorch, and MXNet, giving users the option to bring their custom algorithms or pretrained models.

- Data Labeling with SageMaker Ground Truth

This feature applies machine learning to reduce manual labeling effort while maintaining accuracy. Ground Truth speeds up label creation for training data by automating and outsourcing portions of the task.

- Distributed Training for Scalability

Amazon SageMaker efficiently scales training jobs across several instances, using, for example, data parallelism and model parallelism. For instance, large models can be trained faster by splitting computing workloads across multiple GPUs and CPUs.

- Integrated Jupyter Notebooks

SageMaker offers comprehensive managed Jupyter notebooks that clients may use for exploratory data analysis and prototyping. These notebooks can utilize AWS services, allowing them to fetch and process data with ease.

- Hyperparameter Optimization

To automate model performance improvements, SageMaker employs hyperparameter tuning. It runs many training jobs with different parameters to determine the most suitable model configuration within the shortest time frame possible.

- SageMaker Studio

This Integrated Development Environment (IDE) enables users to build, train, debug, visualize, and deploy ML models in one single location. With SageMaker Studio, users can keep track of resource consumption and conveniently manage training jobs.

- Model Deployment and Monitoring

Users can easily deploy and serve models to production environments using endpoints with SageMaker's one-click deployment feature. The built-in A/B testing, automatic scaling, and other features help in simplifying live optimizations when serving models. Furthermore, it provides tools to measure performance metrics, such as latency and prediction accuracy.

- Augmented AI (A2I)

SageMaker A2I helps users with adding human review/oversight for workflows requiring higher accuracy, such as processing documents or fraud detection.

Relevant Technical Parameters

- Instance Types: Supports a wide range of AWS EC2 instance types including GPU accelerated instances for training large models, such as ml.p2.xlarge and ml.p3.2xlarge.

- Storage: SageMaker connects with Amazon S3, enabling scalable data storage. Training jobs can work with datasets in an S3 bucket directly.

- Security: Pre-built integration with AWS Identity and Access Management (IAM) enables role-based access control. SageMaker allows encryption using AWS Key Management Service (KMS) as well.

- Training Speed/Scaling: SageMaker supports distributed training on multiple nodes using Horovod or other custom-built parallelization techniques.

- Optimization: Works with the optimizers provided by the supported frameworks, such as Adam, SGD, and adaptive learning rate methods.

By combining these functionalities with the infrastructure provided by AWS, Amazon SageMaker can handle complex machine learning workflows for businesses of any size.

Overview of Google Cloud MLOps Solutions

Google Cloud provides a complete set of MLOps tools that aid in the deployment, management, and monitoring of machine learning models. Its main offering is Vertex AI, which provides integration throughout the entire ML workflow, from data collection to model serving. Automation can be achieved through Vertex AI's custom and pre-built pipelines, and data analysis and scaling are made easier through integration with BigQuery. Furthermore, Google focuses on AI accountability by providing explainability and monitoring features so that model performance and transparency are maintained. With these solutions, teams can operationalize ML at scale with less complexity.

Comparing Open-Source MLOps Tools and Platforms

As with any MLOps tools and platforms, comparing open-source options means assessing their integration, scalability, and technical capabilities. Keep these criteria in mind:

- Tracking and Version Control of Experiments

- Tools: MLflow and DVC

- Features: Allows tracking of experiments, datasets, and model version history for reproducibility.

- Key Parameters:

- Support for Git-based versioning.

- Integration with cloud and/or on-premises storage solutions.

- Model Deployment

- Tools: TensorFlow Serving and KServe (formerly KFServing)

- Features: Enables serving models over APIs and offers flexibility on where models can be deployed, either on-premises or in the cloud.

- Key Parameters:

- Reduction of latency for real-time inference.

- Support for several frameworks (e.g., TensorFlow, PyTorch, XGBoost).

- Workflow Orchestration

- Tools: Kubeflow Pipelines and Apache Airflow

- Features: Ability to automate and schedule machine learning workflows.

- Key Parameters:

- Support for Directed Acyclic Graph (DAG) based workflows.

- Integration with Kubernetes.

- Monitoring and Logging

- Tools: Prometheus and Grafana

- Features: Model performance monitoring as well as model drift detection.

- Key Parameters:

- Real-time model drift alerts.

- Visual analytics dashboard.

- Scalability and Resource Management

- Tools: Ray, MLflow

- Features: Handle large-scale training or inference seamlessly.

- Key Parameters:

- Support for distributed training.

- Utilization of GPU/TPU for accelerated computing.

ML professionals can evaluate open-source tools within these parameters to choose a platform or toolset that meets the needs of their project while offering both convenience and effectiveness.
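The DAG-based workflows listed under workflow orchestration can be illustrated with Python's standard `graphlib` module. This is a toy sketch of the idea behind orchestrators such as Kubeflow Pipelines and Apache Airflow, not their actual APIs; the step names and the helper `execution_order` are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Each step maps to the set of steps it depends on (hypothetical pipeline).
pipeline = {
    "ingest": set(),
    "validate": {"ingest"},
    "preprocess": {"validate"},
    "featurize": {"validate"},
    "train": {"preprocess", "featurize"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def execution_order(steps: dict[str, set[str]]) -> list[str]:
    """Return a valid run order, or raise ValueError if the graph has a cycle."""
    try:
        return list(TopologicalSorter(steps).static_order())
    except CycleError as err:
        # args[1] holds the list of nodes that form the detected cycle.
        raise ValueError(f"pipeline is not a DAG: {err.args[1]}") from err


order = execution_order(pipeline)
print(" -> ".join(order))
```

A real orchestrator adds scheduling, retries, and parallel execution of independent steps (here, `preprocess` and `featurize`), but the dependency ordering works the same way.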

Frequently Asked Questions (FAQ)

Q: What is an end-to-end MLOps platform?

A: An end-to-end MLOps platform is a comprehensive solution that integrates tools and processes for managing the entire machine learning workflow, from data preparation and model development to deployment and monitoring of machine learning models in production.

Q: What are the benefits of MLOps for AI projects?

A: The benefits of MLOps include streamlined deployment of ML models, improved collaboration between data scientists and ML engineers, enhanced model monitoring and maintenance, and more efficient scaling of machine learning operations.

Q: How does AWS support MLOps?

A: AWS supports MLOps by providing a variety of tools and services like AWS SageMaker, which helps in building, training, and deploying machine learning models at scale. AWS offers APIs and integration options to manage the complete ML system.

Q: What is MLOps Level 1?

A: In Google's widely cited MLOps maturity model, Level 1 refers to ML pipeline automation: the training pipeline is automated so that models can be retrained continuously and experiments stay reproducible, in contrast to the fully manual process of Level 0.

Q: What is MLOps Level 2?

A: MLOps Level 2 involves more advanced practices, including continuous integration and deployment of machine learning models, as well as automated monitoring and management of models in production environments.

Q: Why is model metadata management important in MLOps?

A: Model metadata management tools are crucial for tracking and organizing data about machine learning models, such as version history, parameters, and performance metrics, which help in maintaining the quality and reliability of models deployed in production.

Q: How does MLflow contribute to MLOps?

A: MLflow is an open-source tool that aids in managing the machine learning lifecycle, including experimentation, reproducibility, and deployment. It provides tools for tracking experiments, packaging code into reproducible runs, and sharing and deploying models with a REST API.

Q: What challenges does an end-to-end MLOps platform address?

A: An end-to-end MLOps platform addresses challenges such as integrating diverse ML tools, ensuring a smooth transition from ML development to production, maintaining model performance, and facilitating collaboration across data science and ML teams.

Q: How do open-source tools fit into the MLOps landscape?

A: Open-source tools provide flexibility and customization options for organizations implementing MLOps practices. They can be used to build custom pipelines, manage ML workflows, and integrate with proprietary systems, contributing to a robust machine learning platform.

Q: What role does a feature platform play in MLOps?

A: A feature platform plays a crucial role in MLOps by providing tools to manage, store, and serve features for machine learning models. It ensures that models have access to accurate and up-to-date data, which is vital for maintaining performance and reliability in production.