Creating large language models (LLMs) is one of the most complex problems tackled so far in artificial intelligence. These models process and generate human-like language. They can perform multiple tasks, such as responding to questions, summarizing content, writing articles, and translating between various languages. Their versatility has led to increased adoption across industries, academic fields, and the general public.

This post aims to give readers concrete information about large language models, the technologies and methods used to develop them, and what they might be able to do in the future. First, we will cover the essential ideas behind language models, such as the deep learning techniques that neural networks employ. After that, we will look closely at how models are trained and how they comprehend and generate human language. Finally, we will cover practical uses of LLMs, the social concerns surrounding them, and their limitations. After reading this guide, you will understand the basic concepts of large language models and how disruptive this technology may be to our world.

What are Large Language Models?

AI has come a long way, and large language models (LLMs) are among its most visible advances. You can now converse with a computer in ordinary language and receive a response in kind. LLMs are trained on very large volumes of text, which helps them model words, phrases, and sentences in context. Built on neural networks, they can translate text, summarize it, and generate new text. Given a user's input, an LLM predicts the most likely continuation, which is how it produces meaningful responses. The range of possible applications is broad.

Understanding Natural Language Processing

Natural Language Processing (NLP) is the field that connects human language and computing. It helps machines interpret and produce language so they can communicate with people more effectively, and it plays a vital role in sentiment analysis, chatbots, text summarization, and translation. Machine learning techniques, powered by large datasets and modern algorithms, make it possible to model language, context, and the sentiment behind words. Because NLP covers both understanding and generating language, modern LLM-based systems such as chatbots can automate many everyday language tasks.

How Machine Learning Models Enable Language Understanding

Software can comprehend language with machine learning models thanks to sophisticated algorithms, training data, and computation. Neural networks built on the transformer architecture, such as BERT and GPT, are at the heart of these models. They process enormous volumes of text to learn how meaning and context relate.

Key factors:

- Training Dataset Size: To train models accurately and effectively, high-quality, heterogeneous datasets, such as Common Crawl and Wikipedia, which contain billions of words, are required.

- Model Architecture: The dominant architecture is the transformer, whose attention layers weigh the importance of each word in its context. Two defining dimensions are:

  - Layer count (for instance, 12 for BERT-Base and 96 for the largest GPT-3 model)

  - Hidden-unit dimension (such as 768 for BERT-Base and 12,288 for GPT-3)

- Tokenization: To prepare input text for the model, the text is split into smaller units known as tokens, using methods such as WordPiece or Byte Pair Encoding. Working at the subword level keeps the vocabulary compact and lets the model represent rare or unseen words flexibly.

- Hyperparameters:

  - Learning rate (usually between 1e-5 and 5e-4)

  - Batch size (32, 64, or considerably more when training is distributed)

  - Number of training epochs, which depends on the size of the dataset but is typically 3 to 10

- Loss Function: In sequence prediction problems, models frequently use cross-entropy loss, minimized with the AdamW optimizer for gradient updates.

- Pretraining and Fine-Tuning:

  - Pretraining uses unsupervised training on large corpora to capture the general structure of language.

  - Fine-tuning adapts the pretrained model to specific tasks, like sentiment detection or summarization, through supervised training on labeled data.

With these components, machine learning models accomplish specific language tasks, like question answering, summarization, or sentence generation, with high accuracy. Their flexibility and scalability place these models at the core of contemporary NLP systems.
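To make the loss function above more concrete, here is a minimal sketch of cross-entropy for next-token prediction in plain Python. The four-word vocabulary and the scores are hypothetical values chosen for illustration; a real model computes this over tens of thousands of tokens with a numerical library.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the correct next token."""
    probs = softmax(logits)
    return -math.log(probs[target_index])

# Hypothetical 4-token vocabulary and model scores for the next token.
vocab = ["cat", "dog", "sat", "mat"]
logits = [1.0, 0.5, 3.0, 0.2]

loss_correct = cross_entropy(logits, vocab.index("sat"))  # model favors "sat"
loss_wrong = cross_entropy(logits, vocab.index("mat"))    # model disfavors "mat"
print(f"loss if target is 'sat': {loss_correct:.3f}")
print(f"loss if target is 'mat': {loss_wrong:.3f}")
```

The loss is small when the model puts high probability on the correct token and large otherwise, which is the signal the optimizer uses to update the weights.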

The Role of Neural Networks in LLMs

Neural networks underpin large language models (LLMs), enabling them to comprehend and generate text in a human-like manner. These models primarily depend on transformer neural networks, which use self-attention to handle sequential data. The transformer architecture, proposed by Vaswani et al. in 2017, improves on traditional recurrent neural networks (RNNs) by allowing inputs to be processed in parallel, which increases the speed and scale of computation.

Some of the neural network components within LLMs include but are not limited to:

- Self-Attention Mechanism: Evaluates the relevance of distinct words in a sequence to facilitate the model’s comprehension of contextual dynamics.

- Multi-Layer Perceptrons (MLPs): Add non-linearity to the model through layer-by-layer transformations of each token's representation.

- Embedding Layers: Turn text into a numerical representation by converting input tokens to dense, fixed-size vectors.

- Residual Connections and Normalization: Protect against vanishing and exploding gradients while preserving the flow of the gradient during backpropagation.
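The self-attention mechanism listed above can be sketched in a few lines of plain Python. This is an illustrative simplification, not a production implementation: the toy vectors are made up, and they are used directly as queries, keys, and values, whereas a real transformer first passes them through learned linear projections and uses several attention heads.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence.

    Each argument is a list of vectors (one per token). Returns the
    attended output vectors and the attention weights.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query with every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors; reusing them as Q, K, and V is a simplification.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one token attends to every token in the sequence, and each row sums to 1.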

Typical LLMs are characterized by the following:

- Model Size:

  - GPT-3: 175 billion parameters (the size of GPT-4 has not been officially disclosed)

  - BERT-Large: 340 million parameters

- Training Data:

  - Models are trained on datasets spanning several domains, including books, articles, and code, which can total more than 400 billion tokens.

- Compute Resources:

  - Training runs usually require thousands of GPUs working in parallel on supercomputer-class clusters.

  - The GPU hours required for pretraining may range from thousands to millions.

- Optimization Algorithms:

  - Optimizers such as AdamW adjust the learning process efficiently.

  - Large batch sizes (512 to 8192) and learning rate schedules (warm-up and decay) help keep training stable.

LLM designs are exceptionally versatile. They enable rapid generation and comprehension of intricate language and support diverse functions, such as conversational AI and thorough text summarization.
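The warm-up and decay schedule mentioned above might look like the following sketch. The function name, the peak and minimum rates, and the step counts are assumptions chosen for illustration, and cosine decay is only one of several decay shapes in common use.

```python
import math

def learning_rate(step, peak_lr=3e-4, warmup_steps=1000, total_steps=10000, min_lr=3e-5):
    """Linear warm-up to peak_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        # Ramp up gradually so early, noisy gradients do not destabilize training.
        return peak_lr * (step + 1) / warmup_steps
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (peak_lr - min_lr) * cosine

for step in (0, 500, 999, 1000, 5500, 10000):
    print(step, f"{learning_rate(step):.2e}")
```

The rate climbs during the first 1,000 steps, peaks, and then falls smoothly toward the minimum by the final step.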

How Do Large Language Models Work?

LLMs comprehend and produce text by drawing on a large body of training data. These models are based on artificial neural networks, specifically transformers, which encode input text together with its context. They depend on attention, which allows them to concentrate on particular parts of the text when predicting the next words, phrases, or sentences, and ultimately whole passages such as translations and summaries. Considerable effort goes into the training stage, where billions of parameters are adjusted based on patterns in the data to increase accuracy and adapt to various language tasks.

The Mechanics of Transformer Models

Self-attention enables transformer models to weigh words against one another and against their context when processing text. Before processing, the text is split into tokens, which are small units such as words or word fragments, and each token is mapped to a numerical vector. Because transformers process all tokens of a sequence in parallel, they train quickly and perform well on tasks such as summarization, text generation, and translation. Performance depends heavily on the scale and quality of the training set, and a large number of parameters must be adjusted for the model to capture the patterns needed for tasks across multiple languages.

Training with Large Amounts of Data

Training sophisticated AI models requires datasets that are both large and varied, in the nature of their contents and in the range of language they use. Extensive and varied datasets enable models to identify deeper and more complex patterns, which improves accuracy. Dataset size, batch size, and the number of model parameters are some of the key technical aspects. For example, training a transformer such as GPT-3 (which has 175 billion parameters) calls for a batch size of roughly 256 to 2048, together with a text corpus drawn from many fields. These settings also need to be tuned so that training neither wastes compute nor leads to overfitting.
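As a rough illustration of where a parameter count comes from, the sketch below estimates it from the layer count and hidden size. It rests on stated assumptions: about 12 × hidden_size² weights per transformer layer, and commonly cited vocabulary sizes (50,257 for GPT-3, 30,522 for BERT-Base). Biases, layer norms, and position embeddings are ignored, so the results are approximations rather than official figures.

```python
def estimate_parameters(num_layers, hidden_size, vocab_size):
    """Rough transformer parameter count.

    Assumes each layer holds about 12 * hidden_size**2 weights
    (4 * d^2 for attention projections, 8 * d^2 for a 4x-wide MLP)
    and ignores biases, layer norms, and position embeddings.
    """
    per_layer = 12 * hidden_size ** 2
    embeddings = vocab_size * hidden_size
    return num_layers * per_layer + embeddings

gpt3 = estimate_parameters(num_layers=96, hidden_size=12288, vocab_size=50257)
bert_base = estimate_parameters(num_layers=12, hidden_size=768, vocab_size=30522)
print(f"GPT-3 estimate:     {gpt3 / 1e9:.1f} billion")
print(f"BERT-Base estimate: {bert_base / 1e6:.1f} million")
```

The GPT-3 estimate lands close to the published 175 billion, which shows that depth and width account for almost all of a model's size.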

The Importance of Fine-Tuning in LLMs

Fine-tuning is crucial because it adapts a general-purpose model to specific tasks or domains. Training the model further on a smaller, task-specific dataset can maximize accuracy and relevance, provided care is taken to avoid overfitting to that smaller dataset. This approach brings the model to the required performance level without repeating the full training process, which saves time and computational power. Fine-tuning also aligns the model more closely with user needs, making the resulting solutions more effective and easier to use.

What are the Use Cases for LLMs?

LLMs have many applications. In content development, they generate articles, marketing copy, and emails. In customer support, they power chatbots that provide automatic, precise answers. LLMs also offer near-instant translation, which helps overcome language barriers, and they assist in summarizing texts and extracting information from them. Because of their ability to comprehend human language, they are also effective in virtual assistants, recommendation tools, personalized engagement, and educational tools.

Applications in Natural Language Generation

LLMs possess strong Natural Language Generation (NLG) capabilities, which makes many applications possible. They can generate coherent, context-sensitive text for content writing, report generation, storytelling, and automated customer service communication. Advanced models, such as OpenAI's GPT series or Google's T5, produce fluent content, both long and short.

Some of the significant technical parameters that relate to performance and NLG are:

- Model Size: The size of a model is measured by its number of parameters, which influences the sophistication and coherence of the text it produces. A well-known example is GPT-3, whose 175 billion parameters produce highly fluent text.

- Training Data Volume: The quality and variety of training data largely determine the model's ability to generalize. Models such as GPT-4 are trained on enormous, varied datasets of books, articles, and Internet content.

- Temperature: This value controls the randomness of responses (commonly between 0 and 1, though some systems accept higher values). Higher values produce more varied, creative results, while lower values produce more predictable ones.

- Top-k and Top-p Sampling: Top-k sampling limits the choice to the k most probable next tokens, while nucleus (top-p) sampling keeps the smallest set of tokens whose cumulative probability reaches p. Combining them helps balance originality and coherence.

- Fine-tuning: LLMs can be fine-tuned on domain-specific data so that they perform better in targeted applications and align with specific industry needs.

With LLMs, NLG can automate routine writing across industries, produce personalized text, and cope with a wide variety of languages. There is still considerable room for further advancement.
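The temperature, top-k, and top-p settings described above can be combined in a single sampling step. The sketch below is a simplified illustration in plain Python: the function name and the four scores are hypothetical, and real inference libraries apply the same ideas to full vocabularies with their own parameter conventions.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Pick a token index using temperature, top-k, and top-p (nucleus) filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature scaling: lower values sharpen the distribution.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Rank candidate tokens from most to least probable.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    if top_p is not None:
        kept, cumulative = [], 0.0
        for i in ranked:
            kept.append(i)
            cumulative += probs[i]
            if cumulative >= top_p:
                break
        ranked = kept

    # Sample among the surviving tokens (random.choices renormalizes the weights).
    return rng.choices(ranked, weights=[probs[i] for i in ranked], k=1)[0]

logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for a 4-token vocabulary
rng = random.Random(0)
print([sample_next_token(logits, temperature=0.7, top_k=3, top_p=0.9, rng=rng) for _ in range(10)])
```

Setting top_k to 1 makes the choice deterministic (always the most probable token), while raising the temperature or top_p widens the set of tokens that can be chosen.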

Utilizing LLMs for Sentiment Analysis

LLM-based sentiment analysis works by interpreting the emotions or sentiments expressed in text. With pre-trained models, classifying texts as positive, negative, or neutral becomes straightforward and often accurate. This method is valuable when analyzing customer feedback, tracking social media posts, and conducting market analysis because it makes it possible to extract key information from unstructured text. As the technology progresses, models capture more context-specific and sophisticated sentiment language, which improves performance on sentiment analysis tasks.

Enhancing Conversational AI with LLMs

With the aid of large language models, it's possible to achieve fluid, context-aware interactions, which makes a critical difference in conversational AI. These systems rely on large-scale pre-training datasets, attention-based techniques, and transformer architectures to process and generate human-like dialogue. A few technical parameters matter most for successful integration in conversational AI:

- Model size and parameters: Models with billions of parameters, such as GPT-3 with 175 billion, capture context deeply, but they are computationally resource-intensive.

- Pre-training datasets: Diverse, high-quality datasets help the model handle varied dialog scenarios and domains.

- Fine-tuning: Fine-tuning on task-specific data adapts an LLM efficiently to customer support, healthcare, finance, and other domains.

- Latency optimization: Pruning or quantizing models speeds up inference, giving the real-time responsiveness that is crucial to maintaining the flow of a conversation.

- Context window size: Coherent long conversations depend on a sufficiently large context window (for example, 4k to 8k tokens).

Well-tuned LLM parameters result in accurate, dependable, and flexible conversational AI systems that meet user expectations.
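One practical consequence of a fixed context window is that long conversations must be trimmed before they are sent to the model. The sketch below shows one simple strategy, keeping the most recent messages that fit a token budget. The helper names are hypothetical, and counting whitespace-separated words is a crude stand-in for a real tokenizer.

```python
def count_tokens(text):
    """Crude token count: whitespace-separated words (an assumption)."""
    return len(text.split())

def trim_history(messages, max_tokens):
    """Keep the most recent messages whose combined size fits the budget."""
    kept, used = [], 0
    for message in reversed(messages):       # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))              # restore chronological order

history = [
    "User: Hi, I need help with my order.",
    "Bot: Sure, what is the order number?",
    "User: It is 12345 and it arrived damaged.",
    "Bot: Sorry to hear that. Would you like a refund or a replacement?",
]
print(trim_history(history, max_tokens=25))
```

Other strategies, such as summarizing older turns instead of dropping them, trade extra computation for better long-range coherence.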

How are LLMs Trained?

Training a large language model involves the following steps:

- Data Collection: LLMs are trained on varied datasets of text, including books, websites, and other written documents, which supply the linguistic and contextual knowledge the model learns from.

- Preprocessing: The data is cleaned and prepared by eliminating duplicates, removing irrelevant text, and fixing inconsistencies so that the training input is of the highest possible quality.

- Model Architecture: LLMs such as the GPT models are built on the transformer architecture, which lets them learn relationships within the data efficiently.

- Training: LLMs are trained through supervised learning and reinforcement learning. Because the models and datasets are so large, significant computational resources are required. Optimization techniques such as gradient descent are used to reduce prediction errors.

- Fine-Tuning: After its general training, the model may be trained further on domain-specific or task-specific data so that it performs better on its target objective.

- Evaluation and Validation: The trained model is evaluated on separate datasets to test its accuracy, coherence, and efficiency, and how well it generalizes to different tasks.

Following this workflow makes it possible to deploy LLMs in AI systems that engage users and produce purposeful, context-aware responses.
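The gradient descent step in the training stage above can be illustrated on a deliberately tiny problem. The sketch below fits a two-parameter line rather than a language model, and the data, learning rate, and epoch count are illustrative assumptions. LLM training applies the same update rule to billions of parameters, with gradients computed by backpropagation and optimizers such as AdamW.

```python
def train_linear_model(data, learning_rate=0.05, epochs=1000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear_model(data)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Each pass nudges the parameters in the direction that lowers the prediction error, so the fitted values approach the slope and intercept used to generate the data.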

The Process of Data Collection and Preparation

Data collection focuses on obtaining high-quality datasets from various trustworthy sources to train a language model effectively. Collecting text from books, articles, and websites into a corpus requires abiding by ethical and legal limitations such as copyright law. Once collected, the datasets are cleaned and preprocessed to eliminate noise, inconsistencies, and irrelevant information. Tokenization, normalization, and character filtering are some of the commonly used preprocessing techniques. This structures the model's inputs so that it learns more effectively and performs better.
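A minimal sketch of the cleaning steps described above, using only the Python standard library. The function names, the three-word minimum, and the sample documents are assumptions for illustration. Production pipelines add steps such as language detection, near-duplicate detection, and quality filtering.

```python
import re
import unicodedata

def normalize(text):
    """Unicode-normalize, drop control characters, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = "".join(ch for ch in text if ch.isprintable() or ch.isspace())
    return re.sub(r"\s+", " ", text).strip()

def preprocess(documents, min_words=3):
    """Clean documents, drop very short ones, and remove exact duplicates."""
    seen, cleaned = set(), []
    for doc in documents:
        text = normalize(doc)
        if len(text.split()) < min_words:
            continue                      # filter out near-empty fragments
        key = text.lower()
        if key in seen:
            continue                      # skip duplicates (case-insensitive)
        seen.add(key)
        cleaned.append(text)
    return cleaned

raw = [
    "Large   language models\tlearn from text.",
    "large language models learn from text.",
    "Click here",
    "Tokenization splits text\x00 into smaller units.",
]
print(preprocess(raw))
```

Of the four raw entries, only two survive: the duplicate and the two-word fragment are dropped, and the stray control character and extra whitespace are removed.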

Understanding Generative Pre-trained Models

GPT and other generative pre-trained models apply unsupervised learning to a large volume of language data so they can model context and produce coherent sentences. These models consist of several transformer blocks, a type of neural network architecture for sequential data. Technical characteristics of these models include:

- Depth (number of layers): 12, 24, or more (GPT-3's largest model has 96 layers).

- Width (hidden units per layer): 768 to 12,288 in larger models, which sets how much information each layer can represent.

- Attention heads: 12, 24, or more per layer, each attending to different relationships within the input sequence.

- Training data size: Often hundreds of gigabytes to terabytes of diverse text.

- Model parameters: From millions to hundreds of billions (for example, GPT-3 has 175 billion), enabling nuanced understanding and generation.

- Tokenization method: Byte Pair Encoding (BPE) or a similar approach, which breaks text into smaller pieces while retaining its meaning.

Through pre-training, followed by task-specific fine-tuning and adjustment of these parameters, generative models achieve remarkable versatility in language understanding and content creation.
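To show how Byte Pair Encoding builds subword tokens, here is a toy version of the merge-learning loop. It is a sketch under simplifying assumptions: it starts from characters rather than bytes, uses a six-word corpus invented for illustration, and omits the end-of-word markers and byte-level handling that real tokenizers use.

```python
from collections import Counter

def learn_bpe_merges(words, num_merges):
    """Learn byte-pair-encoding merges from a list of words (toy version)."""
    # Represent each word as a tuple of symbols, starting from characters.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent symbol pair occurs.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair; ties go to the first seen
        merges.append(best)
        # Rewrite every word with the chosen pair fused into one symbol.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] += freq
        corpus = new_corpus
    return merges, corpus

words = ["low", "low", "lower", "lowest", "newer", "newest"]
merges, corpus = learn_bpe_merges(words, num_merges=3)
print(merges)
print(list(corpus))
```

Frequent character sequences such as "low" become single tokens after a few merges, while rarer words remain split into smaller pieces.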

Challenges in Training Large Models

The challenges encountered when training large-scale generative models hinge on computational, data, and environmental problems:

- Computational Resource Requirements

Like every large model, GPT-3 and GPT-4 require substantial compute to train. This makes it imperative to have hundreds, if not thousands, of high-end GPUs or TPUs running simultaneously.

- Extensive Data Needs

Another facet of these models is the massive, high-quality, and diverse datasets required to train them. The datasets must be balanced across multiple domains, such as books, dialogue, news, and even code, to avoid niche or biased content. Preparing these datasets adds further complexity because steps such as tokenization and de-duplication are tedious.

- Optimization and Memory

With billions of parameters, optimization becomes more complex, with challenges such as memory bottlenecks and vanishing or exploding gradients. Frameworks such as Megatron-LM address these with careful memory management, mixed-precision training, distributed training methods, and gradient checkpointing.

- Cost and Energy Consumption

High energy consumption remains a persistent concern. For example, the cost of training GPT-3 is estimated to be in the millions of dollars. This affects both project budgets and carbon footprints, and there is a clear need for more energy-efficient algorithms and low-carbon AI solutions.

- Overfitting and Generalization

When large models are trained with limited or biased datasets, they are highly susceptible to overfitting and may not generalize well. To address this, dropout regularization, augmenting the data, and adding diversity to the dataset in the preprocessing phase can be employed.

- Ethical and Bias Challenges

Models trained on unfiltered, biased data can reproduce and reinforce stereotypes through feedback loops. Mitigating this calls for inclusive data curation, adversarial training, and post-deployment auditing.

Implementing these advanced techniques, while at the same time being mindful of the design, will allow the construction of effective and ethical large language models.
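The memory pressure described above can be estimated with simple arithmetic. The sketch below assumes a 175-billion-parameter model and a common rule of thumb of about 16 bytes per parameter for mixed-precision training with an Adam-style optimizer. Activation memory is excluded, so real requirements are higher.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gb(num_parameters, precision="fp16"):
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_parameters * BYTES_PER_PARAM[precision] / 1e9

def training_memory_gb(num_parameters):
    """Rough mixed-precision training footprint with an Adam-style optimizer.

    Assumes about 16 bytes per parameter: fp16 weights (2) and gradients (2),
    plus fp32 master weights (4) and two fp32 optimizer moments (8).
    Activations are excluded.
    """
    return num_parameters * 16 / 1e9

params = 175e9  # GPT-3 scale
for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(params, precision):,.0f} GB for weights")
print(f"training state: {training_memory_gb(params):,.0f} GB before activations")
```

Even at half precision the weights alone exceed the memory of any single accelerator, which is why training is spread across many devices and why quantization matters at inference time.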

What are the Benefits of Large Language Models?

Large language models have transformed numerous industries, largely because of a few fundamental benefits. To begin with, these systems specialize in understanding and producing human-like text, which allows them to power chatbots, virtual assistants, and automated writing tools. Their ability to digest vast amounts of data also makes them valuable for summarizing information, translating, and researching. In addition, they help users communicate across languages and assist people who need support, which enhances accessibility. They have also supported businesses by enabling better customer relationship management and streamlining supporting functions. The innovation and productivity these models provide make them valuable across many industries in today's technology-heavy world.

Improving Human-Machine Interaction

To promote interaction between humans and machines, systems need to be user-friendly, proactive, and easy to modify. The first step is applying advances in NLP, which make communication easier by correctly interpreting the user's input and the context surrounding it. Second, adaptive learning models can improve each user's experience over time by adjusting to individual behavior. Specific technical targets must still be met, such as average latency of no more than 300 ms and over 90% accuracy in core functions like speech recognition or text analysis. Furthermore, the datasets used must be diverse to mitigate biases. Together, these priorities improve human-machine interaction in both effectiveness and user-friendliness.

Advancements in Text Generation Capabilities

Progress in text generation has been remarkable, especially with the emergence of AI systems like GPT, whose output is often highly relevant to context, although it is not always accurate. The technology combines large datasets with a transformer architecture that captures the grammatical particulars of language, making it useful in content generation, customer relations, education, and more. Ongoing work on reducing bias and improving coherence and contextual understanding aims to make these tools reliable across a wide range of situations.

Facilitating Multimodal Model Applications

Multimodal systems analyze and interpret information from different sources, including text, video, images, and audio, providing richer and more well-rounded results. They improve tasks such as interactive multimedia content development, visual question answering, and dialog systems. Recent advancements emphasize user-friendliness, real-time adaptability, and interactivity, enabling progress in fields like medicine, education, and entertainment. Balancing the pace of technology development with ethical principles remains a challenge that must be addressed to deliver better and more creative outcomes.

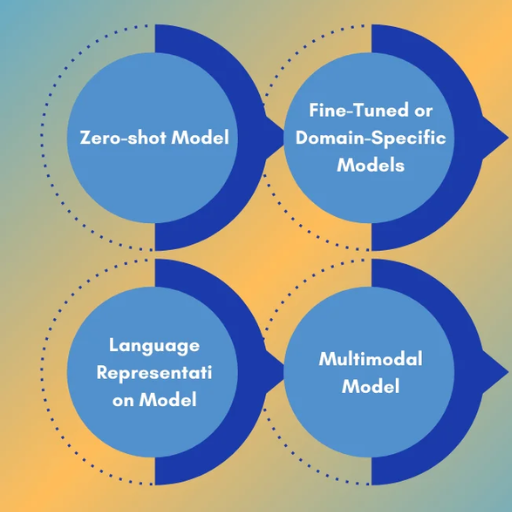

How Do LLMs Compare to Other AI Models?

Large Language Models (LLMs) process and generate human-like text, and they stand apart from other AI models in their construction and intended use. LLMs are trained on enormous amounts of text, which makes them effective at various tasks, including translation, summarization, and conversational interfaces. Unlike AI models with a narrow focus, such as vision or audio models, text-only LLMs have no multimodal capabilities unless they are integrated with other systems. Specialized models may outperform them in areas like image recognition or audio processing. Still, the strength of LLMs is the flexibility they offer in language tasks across various industries.

Differences Between LLMs and Traditional Models

- Training Dataset

  - LLMs are trained on a wide range of human-written text drawn from different sources, which is why they have broad contextual understanding and human-like language generation capabilities.

  - Traditional models are usually simpler and are trained on smaller datasets specific to one domain or task.

- Scale and Parameters

  - Large language models usually have billions of parameters, which lets them handle more complicated language and context. For instance, GPT-3 has 175 billion parameters.

  - Traditional models are designed with fewer parameters, which makes them more efficient to run. Fewer parameters also mean less scope for generalizing across multiple contexts.

- Application Focus

  - Beyond industry-specific applications, LLMs can competently perform text summarization, translation, and sentiment analysis.

  - Traditional models are exceptionally good at narrowly defined jobs such as automating procedures or rule-based classification.

- Multimodality

  - LLMs are trained primarily to generate and process text, and they can be extended to work with other data types.

  - Traditional models built for other modalities, such as vision, handle non-textual data like images or audio directly.

- Performance

  - LLMs capitalize on transfer learning, which yields proficient performance on a wide range of tasks. However, without adequate fine-tuning, LLMs may fall short in particular use cases.

  - Because they are optimized for a narrow purpose, traditional models often outperform LLMs on specific tasks but cannot quickly shift to other tasks.

This analysis has shown that LLMs are more flexible and proficient in language, while traditional models are better in efficiency and specialized tasks. Which one to choose greatly depends on the targeted problem type and available computation resources.

The Evolution from Machine Learning to Deep Learning

Shifting from machine learning to deep learning marks a change in how data is handled and modeled. Traditional machine learning relies on feature engineering, in which people with in-depth domain knowledge identify and define the most relevant features for a specific task. Deep learning, on the other hand, automates this process by using multi-layered neural networks (the "deep" part) that learn and extract features directly from raw data.

The key technical parameters that differentiate the two approaches are model complexity, the amount of data needed, and computing power. Deep learning architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) can have tens of millions of parameters and need to be trained on large datasets to avoid overfitting, whereas simpler algorithms like decision trees or support vector machines (SVMs) work well with smaller datasets. Because deep learning is more complex, it also requires greater computational power, most often GPUs or TPUs, to train efficiently with backpropagation and gradient descent optimization.

The advancement of deep learning models has made possible breakthroughs in image recognition, natural language processing, and autonomous systems, showcasing the ability of deep learning to manage intricate, high-dimensional data.

Future Prospects for AI Chatbots and LLMs

Prospects for AI chatbots and large language models (LLMs) technology are exceedingly bright. These technologies will keep changing sectors by improving customer support, automating mundane processes, and facilitating advanced interaction. As deep learning progresses, generative models’ context comprehension will improve, enabling even more advanced communications in models like GPT-4. However, ethical considerations, bias in training data, and scarce computation resources remain significant challenges. Striking the balance between harnessing these technologies' personalization and knowledge-creation power while solving these problems will define the next innovation cycle.

Frequently Asked Questions (FAQ)

Q: What are large language models?

A: Large language models (LLMs) are artificial intelligence systems designed to understand and generate human language. These models are trained on large datasets and use advanced algorithms to predict the next word in a sequence, allowing them to generate text, translate languages, and perform other language-related tasks.

Q: How are language models trained?

A: Language models are trained using vast amounts of text data. This training involves feeding the model large datasets containing diverse examples of human language. During training, the model learns to recognize patterns and structures in the data, which allows it to generate coherent and contextually appropriate text.

Q: What are some everyday use cases for LLMs?

A: LLMs can be used in various applications such as chatbots like ChatGPT, language translation, content creation, and summarization. They are also employed in programming assistance, where they help generate code snippets based on a provided prompt. Additionally, LLMs are used in research to analyze unstructured data and explore new AI capabilities.

Q: How do LLMs work in generative AI?

A: In generative AI, LLMs take a prompt as input and predict the next word or sequence of words to generate coherent text. This is loosely comparable to how a person anticipates the next word in a sentence. The model uses patterns learned from its training data to interpret the context and produce text aligned with the input prompt.

Q: What is the role of tokens in LLMs?

A: Tokens are the basic units of text that LLMs process. Each word or part of a word is converted into a token when processing text. The model uses these tokens to analyze and generate language, efficiently handling large and complex text pieces.

Q: How do models like ChatGPT use LLMs?

A: Models like ChatGPT use LLMs to understand and generate human-like text responses in real time. They are trained on extensive datasets to learn the intricacies of language and can engage in conversations, answer questions, and provide information based on user input.

Q: What are the capabilities of language models?

A: The capabilities of language models include generating coherent text, translating languages, summarizing information, and understanding context in a conversation. They can also assist in creative writing, automate customer support, and provide insights from large datasets. As foundation models, their capabilities continue to expand with advancements in AI research.

Q: Why is training data necessary for LLM performance?

A: Training data is crucial for LLM performance as it provides the foundation for learning language patterns and behaviors. The quality and diversity of the data used to train these models directly impact their ability to generate accurate and contextually appropriate text. High-quality data leads to better model performance and more reliable outputs.

Q: Can LLMs be used for language translation?

A: Yes, LLMs can be used for language translation. They analyze the source text, understand its context, and generate a translation in the target language. This process leverages the model's ability to predict the next word or sequence of words in both languages, facilitating accurate translations.