Understanding Large Language Models Explained: How LLMs like ChatGPT Work & Transform AI

Advancements in artificial intelligence have now been made easier with the help of Large language models (LLMs), enabling machines to process, generate, and comprehend human language remarkably. ChatGPT is one of the examples. Programs like these are loaded with deep learning architectures trained with massive chunks of written data and trained enough to carry out numerous tasks related to natural language processing. This blog will explain the technologies behind LLMs, ranging from their developmental process to how they will transform the customer service industry, creative writing, and much more. After reading, I will be able to summarize the process of LLMs, including their practical features, possible applications, and threats to the future of AI.

What are Large Language Models Explained (LLMs), and How Do They Work?

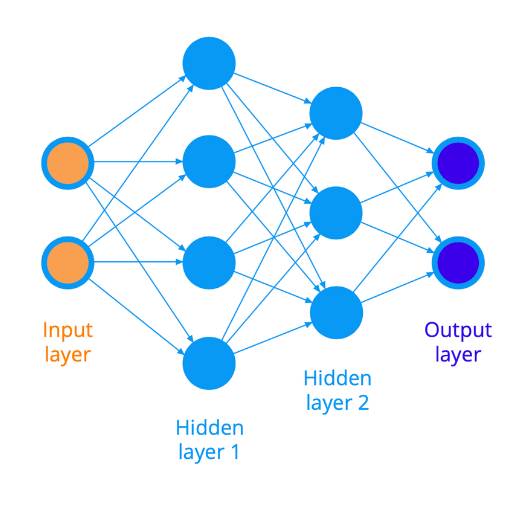

Large Language Models (LLMs) are sophisticated AI models designed to comprehend and produce human language. Their operations entail examining vast amounts of text and obtaining patterns, meanings, and interrelations among words and concepts. They utilize neural network structures, particularly transformers, such as those devised in GPT, to execute language functions by splitting speech into tokens and estimating the subsequent token in the order. Making these predictions enables the models to display fluidity and relevance in the produced text. LLMs are supported by millions and even billions of parameters that facilitate the performance of complex tasks, such as translation, summarization, and answering questions. Nonetheless, this complexity presents other problems like bias, interpretation, and heavy resource demands.

The Evolution of Language Models in Artificial Intelligence

The past decade has perhaps seen the most significant transformations in the field of artificial intelligence, especially in the area of developing language models. Innovations in deep learning and neural networks have primarily fueled this rapid progress. Traditional approaches to AI focused on incorporating set rules and statistics to create algorithms that lacked contextual depth. With the development of the transformer architecture, particularly GPT, the ability of computers to process and generate human language has significantly improved. Models now use large datasets and billions of parameters for more sophisticated tasks like summarizing, translating, and answering complex questions with great accuracy. However, work is still needed to ensure broader accessibility and equity by solving issues related to bias, high computational resources, and opacity of the models’ functionality. There is still much active research to be done in this latter area.

How Transformer Architecture Powers Modern LLMs

Self-attention computing transforms the entire process of generating output texts that resemble human language into an easy job if done with cutting-edge Large Language Models (LLMs). Evolving from deep neural networks, self-attention methods derive from the concepts presented in the 2017 “Attention is All You Need” paper. Self-attention computing stems from gradual advancements in effective and manageable language data processing analysis replete with fundamental structures like positional encoding and self-attention mechanisms.

Key Components of Transformer Architecture:

- Self-Attention Mechanism

A self-attention mechanism is integral to determining each word's relative importance against the other words in the same sequence, which is the case in human languages. It ensures that certain words capture context-rich relationships irrespective of their positional distance within sentences.

- Equation: Attention(Q, K, V) = softmax(QKᵀ / √d_k) V

- Parameters:

- The embeddings of input text produce the Q (Query), K (Key), and V (Value) matrices.

- For almost every implementation, d_k is the dimensionality of keys and queries and is defined to be 64.

- Positional Encoding

To provide context for the order of words in a sentence, positional encoding is added into input embeddings to help the model process a sequence. Transformers lack recurrent mechanisms like the ones used in RNNs, so these encodings are essential for their functionalities.

- Where pos indicates a specific position, the following encoding formulas give:

- PE(pos, 2i) = sin(pos / 10000^(2i/d_model))

- PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model))

- The scale of the model defines d_model values from 256 to 2048.

- Multi-Head Attention

Transformers have multiple heads to simultaneously attend to different input parts instead of having attention defined through a single mechanism. Each of them differs in how they attend to information, which helps the model comprehend the complexity of patterns.

- Standard Parameterization:

- h (number of heads): 8 or 16 (widely used in GPT and BERT families).

- After the attention ‘heads’ are applied, the outputs are combined into one output.

- Feed-Forward Networks (FFN)

A position-wise FFN is applied to every position with each transformer layer, encapsulated by two linear transformations and a ReLU nonlinearity.

- FFN definition: FFN(x)= W2(max(0, xW1+b1))+b2

- In FFNs, the hidden layer's size is usually 4 times the d_model size.

- Layer Normalization and Residual Connections

To enhance the gradient flow and control the convergence during training, layer normalization and residual connections around the sub-layers are utilized.

Benefits of LLMs:

- Scalability: Self-attention enables parallelization, which helps Transformers scale tremendously to large datasets with massive parameter numbers. This feature suits models such as GPT-4 and PaLM.

- Generalization: This architecture can perform numerous NLP tasks without additional tuning within a specific domain.

- Performance: Implementing Transformers in LLMs practically guarantees success and superiority in achieving benchmarks such as GLUE and SuperGLUE.

Real World Examples:

- GPT-3 (175 billion parameters): Generates a set of coherent and diverse texts using stacked transformer layers.

- BERT (340 million parameters): Utilizes bidirectional attention to perform classification and recognition tasks.

- Palm (540 billion parameters): Uses extended transformer layers for superior performance in many languages.

In summary, the modular and efficient design of the transformer still drives AI innovation.

How LLMs Predict the Next Word to Generate Human-like Text

LLMs rely on colossal amounts of text data to identify patterns, contexts, and relationships to predict the next probable word. LLMs can generate coherent and contextually plausible prose one word at a time when employing other deep learning tools, such as the self-attention mechanisms that are a key constituent of the Transformer architecture. Instead of strings of sentences having ma eaning that can be construed differently, LLMs rely on statistical models, enabling them to craft seemingly human-like responses.

Breaking Down the Training Process of Large Language Models

Developing a large language model involves several critical steps. First, a large dataset contains texts from books, articles, and websites covering various subjects and themes. That dataset is then subjected to a cleansing process, which eliminates very low-quality or irrelevant data. During training, the model uses the self-attention mechanism of the Transformer architecture to recognize patterns and relationships within the text being processed. The model uses the prior context for any word to determine what word it wants to use next in the prediction sequence. It progressively improves by reducing the gap between its predictions and the actual data. This stage is revisited numerous times over and over in an iterative procedure, with the models self-adjusting in performance with billions of parameters. In the end, some assessments and adjustments of the outputs are made, sometimes including specific datasets so the model produces contextually relevant, coherent outputs.

How LLMs are Trained on Massive Datasets

To develop large language models (LLMs), one must combine extensive datasets and sophisticated computing equipment to reach desired levels of understanding and perfection. The procedure usually begins with compiling relevant raw data, including text from books, articles, websites, and academic papers. After the data is collected, it undergoes pre-processing, which involves cleansing, tokenization, formatting, and eliminating duplicates.

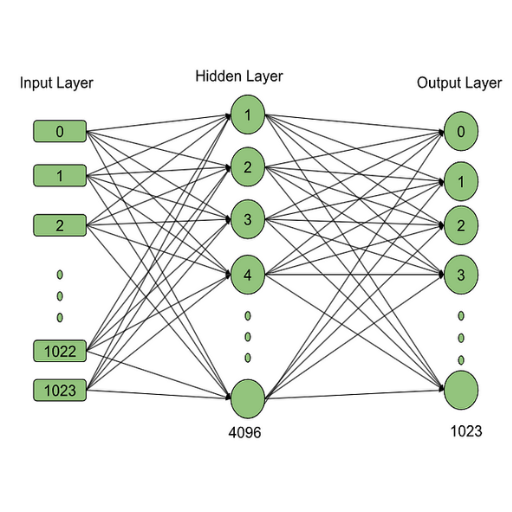

The core training process adopts the transformer architecture, which includes attention systems to focus on relevant word choices within the provided context. Important factors which arise in the training of LLM are:

- Number of Parameters: Current models of LLM, like the GPT variants, have parameters counting in the billions, such as GPT-3, which ranges above 175 billion. Such parameters allow models to understand relationships in different languages.

- Batch Size: A sampled range of 128 to 1024 is usually set per iteration to strike a balance between ideal performance and efficiency.

- Learning Rate: This determines the amount a models parameters are altered during training, often it is started at an approximate value of 1e-4 with decline over time.

- Training Steps: Training can take millions of steps based on the size of the set, and advanced equipment can be needed in a time range from weeks to months.

- Hardware: Training LLMs involves using powerful supercomputers with GPUs or TPUs. Common examples include the Nvidia A100 GPUs or Google’s TPUs, which are especially suited for large-scale matrix multiplications.

The model is trained to predict the next word given the previous n-grams, enhancing its skill through backpropagation and gradient descent optimization. The system is adjusted using specialized datasets or in-context learning for summarization, translation, questioning, or answering tasks.

In the end, using perplexity as a predictive performance evaluation metric and real-world task tests verifies that the model outputs make sense and are accurate.

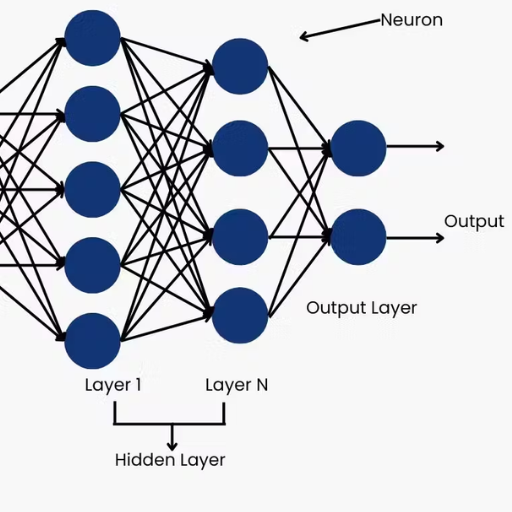

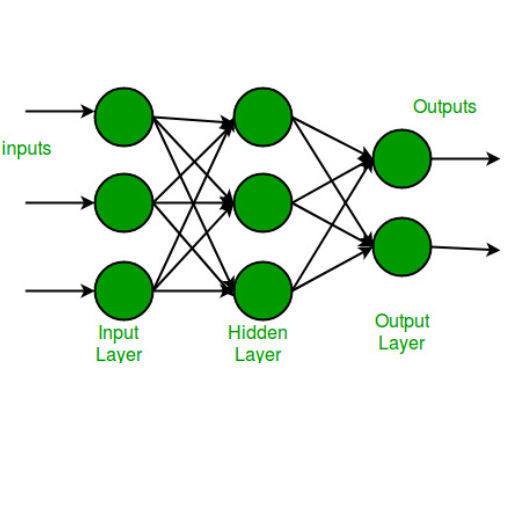

The Role of Deep Learning in Language Model Development

Deep learning contributes significantly to the development of language models by allowing them to learn patterns and relationships in extensive textual data. I use neural networks, specifically transformer architectures like GPT and BERT, to train these models to accept data sequences and respond with the most appropriate outputs. Some crucial technical details that must be tackled on this train of thought are the number of layers (12 for smaller models; state-of-the-art ones can have 96), hidden layer size (which can vary between 768 and 12288), and number of attention heads (which is usually between 12 and 96). Moreover, the amount of training data, which is several hundreds of gigabytes to a few terabytes of text, provides a balanced portrayal of different linguistics is another factor. Changing parameters while training, using methods like Adam optimizer and gradient clipping, results in lower perplexity and improved effectiveness in the context of language tasks.

Why LLMs Require Enormous Computing Resources

The computing resources needed to build and operate large language models (LLMs) are staggering due to their structure and scale. These models require billions and even trillions of parameters to be stored and processed, which involves enormous memory and processing power. Also, the datasets are often measured in terabytes, meaning that high-end hardware such as GPUs and TPUs are needed to store and process the data. Moreover, iterative optimization techniques like backpropagation and gradient descent, which must be calculated alongside other parameters, significantly increase resource demand during training. Even after the models have been trained, there is still the issue of accessing vast amounts of computational power to deliver real-time answers during inference. Running a model at scale always requires significant computing resources.

Key Capabilities and Limitations of Modern LLMs

Key Capabilities

Modern large language models (LLMs) can do them astonishingly well in an array of activities. These highly advanced models can produce human-like speech or text, perform translations or interpret the text, create a summary of the text, and even answer questions relevant to the context. These models considerably understand the context behind the prompt and produce suitable outputs. Such models are also helpful in executing tasks such as sentiment analysis, content creation, and even code generation. Furthermore, LLMs prove their effectiveness in various industries by being fine-tuned on appropriate domain datasets where they deploy specialized techniques.

Key Limitations

Regardless of these models' advantages, some deficiencies come hand in hand with modern LLMs. These models profoundly depend on the quantity and quality of their training datasets; owing to existing biases, the output could be inaccurate or wrong. These models are not human, meaning they do not 'think' per se. As a result, they could provide some responses that sound reasonable but are utterly untrue. On top of all this, LLMs are costly to train and deploy, decreasing the chances of access and scalability. Ensuring these systems' unethical and privacy use can cloud the healthcare and finance industries. The capabilities and limitations of LLMs must be balanced to get their benefits without causing harm.

Natural Language Processing and Understanding Abilities

Natural language processing (NLP) allows machines to understand and respond to human language. Communicative human language can be translated into something a machine comprehends. NLP uses text analysis procedures like tokenization, parsing, and text analysis recognition (NER), among others, to dissect the entire context of a sentence. It also uses machine learning models like transformers, the backbone of remarkable creations like OpenAI’s GPT or Google’s BERT.

The key technical aspects ofNLP makes uses text analysis procedures like tokenization, parsing, and text analysis recognition (NER), among others, to dissect the entire context of a sentence.

- Model Size ( e.g., number of parameters)

- GPT-4 (Estimation): 1.5 trillion parameters

- BERT base model: 110 million parameters

- BERT large model: 340 million parameters

- Learning Data

- Models are pretrained on various datasets, including books, websites, and publically available corpuses to capture language and context around it rather accurately.

- Processing Power

- Models need many computational resources (GPUs or TPUs) to manage training and inference smoothly. As an example, GPT-4 needs several petaflops worth of computational power to be able to train.

NLP has proven beneficial in constructing, translating, sentiment analysis, chatbot design, and formulation of summaries. These tasks can be worked on through model fine-tuning, which helps meet specific standards for more specialized domains. There are, however, still problems with contextual uncertainty, moral deployment, and biases concerning language representation. These are significant issues that still go unaddressed and put attention towards making NP advancements more equitable and ethical.

Common Challenges and Limitations of Current LLMs

From my understanding, current LLMs have a couple of significant limitations. First, they tend to have some contextual accuracy issues, meaning that their outputs are sometimes plausible at first glance but are verifiably false or illogical. Second, they also show biases based on their training data, which can further propagate harmful stereotypes or unfair portrayals. Finally, there is also the issue of resource intensive; training and deploying these models need tremendous computational power and energy, which raises issues regarding their economic and environmental impact. Overcoming these issues requires significant advancements in ethical training practices, model architecture, bias reduction processes, and efficiency augmentation techniques.

The Difference Between General and Specialized Language Models

GPT and BERT are general language models that can simultaneously process and accomplish multiple tasks. These models can generate coherent text on various topics because they are trained on large-scale datasets from different fields and areas. However, they lack depth due to domain knowledge, making them depend on other highly skilled professionals to assist them with specific and highly technical tasks.

Unlike general language models, specialized language models are proficient in specific applications and industries. These models are trained using domain-specific data, which equips them with deep knowledge and understanding of that field, such as healthcare or finance. Consequently, these models become more accurate and relevant, although their use is restricted to a specific context. Because of their limited utility scope, they are not as flexible as general models.

Choosing a specialized or generalized model comes down to the context and requirements. Specialized models outperform general models when the task requires a specific skill or in-depth knowledge and attention. However, general models are more adaptable and user-friendly as they can be utilized without much preparation or resources.

Popular LLMs Like ChatGPT: Architecture and Innovations

Trained with data until now, we can confirm that the transformer model architecture introduced in 2017 is what is used in advanced technologies like ChatGPT. By deploying the self-attention mechanism, the transformer architecture can analyze relationships among all tokens, allowing models to generate and process human text accurately.

Detailed pre-training on many datasets is one of the many innovations distinguishing them from the rest, providing them with unrivaled foundational knowledge. This is further coupled with specific task tuning to increase relevance and accuracy. Additionally, the scale at which these models are built is unmatched. Modern LLMs contain billions of parameters – a feature that allows them to achieve stellar results across myriad complex tasks.

Versatility and power are the defining features of modern LLMs, allowing bridges to be built between general-purpose and specialized model features. These models strive to cater to user input across contexts, a feature that will enable them to be regarded as integral components in AI.

How ChatGPT and GPT Models Revolutionized AI

Understanding these concepts would aid in comprehending that ChatGPT and other models have drastically changed AI as we see it. These models' heart lies in the innovation of natural language processing and deep learning. Even the self-attention and positional encoding methods introduced as building blocks of the transformer model were revolutionary. These features offer an edge in how the models make sense of the context and ensure relevant and coherent text production.

One central element is the magnitude of the parameters incorporated and their scales. For example, models like GPT-3 have 175 billion parameters, making it possible to achieve great finesse in comprehending and producing human language. In addition, training using enormous datasets, typically in the hundreds of gigabytes or more, makes it possible for these models to possess comprehensive knowledge in numerous fields.

The progression of techniques for model fine-tuning also becomes another reason why the model is more flexible and can quickly adapt to specific actions with minimal changes while retaining a reasonable generalization level. Combined with the recent advancements inthe scalability of distributed computing and optimization algorithms, these models have become more proficient in automated content creation, customer service, and even scientific research, integrating significant breakthroughs in AI technology.

Comparing Different Types of Neural Network Architectures

Network types differ fundamentally in their architecture and purpose. Here are some of the common types and their main characteristics:

- Feedforward Neural Networks (FNNs)

- Overview: These are the primary neural networks in which the information flow is unidirectional, moving from the input node to the output node through one or more hidden nodes.

- Applications: Suitable for image classification and recognition tasks.

- Key Parameters:

- Quantities of hidden layers and the number of neurons in each layer.

- Activation functions assignment (for example, ReLU, TanH, sigmoid).

- Learning rate for training optimization set.

- Convolutional Neural Networks (CNNs)

- Overview: CNNs are designed to use convolutional layers to detect patterns in data with spatial hierarchies, such as images. They are designed to process grid-like data.

- Applications: Medical imaging analysis, image and video recognition, and object detection heuristics.

- Key Parameters:

- Kernel dimensions, for example, 3x3 or 5x5.

- Stride and bias.

- The volume of the convolution and pooling layers.

- Recurrent Neural Networks (RNNs)

- Overview: Sequential-dependent data is well catered for by these networks because they can remember previous inputs due to internal loops.

- Applications: Time series prediction, natural language processing (NLP), and speech recognition.

- Key Parameters:

- Hidden state dimensions.

- Sequence length (time steps).

- LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) models improve long-term memory retention.

- Transformers

- Overview: transformers use self-attention techniques, allowing them to consider all input data simultaneously instead of one after the other, permitting the process to be completed in parallel.

- Applications: They are the top performers in NLP problems (including but not limited to machine translation and summarization) and are starting to lead in computer vision (Vision Transformers – ViTs).

- Key Parameters:

- The amount of attention heads.

- The size of the hidden layer and the size of the hidden layer's tessellated areas.

- The rate of dropout to overcome overfitting.

- Generative Adversarial Networks (GANs)

- Overview: a GAN is a set of networks comprised of a generator and a discriminator. It aims to produce new data samples within a particular dataset.

- Applications: Functions in image generation, data augmentation, and super-resolution.

- Key Parameters:

- Size of the latent vector.

- Both the generator and the discriminator's learning rates.

- Amount of layers allocated in each network.

- Graph Neural Networks (GNNs)

- Overview: GNNs have been developed to work with data structured as a set of vertices and edges; they can capture spatial relationships and dependencies in non-Euclidean spaces.

- Applications: Performed social network analysis, recommendation services, and molecular property prediction.

- Key Parameters:

- Count of graph convolution layers.

- Size of node embeddings.

- Aggregation mechanism (e.g., sum, mean, max).

Each type of neural network architecture is constructed to be proficient in some areas using its architectural shape and computation processes. The architecture selection needs to account for the dataset's attention focus and the work's defined objective.

The Evolution from Basic to Multimodal Language Models

Language models have evolved from simple frameworks to the complex and sophisticated multi-modal systems we have today. These models were text-focused and used statistical approaches, including n-grams or simple embeddings, to perform different language-based computations. With breakthrough technologies like self-attention and transformer models, language models could capture more of the context, allowing them to produce better-quality outputs.

The shift towards multimodal language models included new types of inputs, such as text, visuals, and audio recordings. OpenAI’s GPT-4 and Google’s Bard are examples of these new models that combine different data processing and output-generating capabilities by integrating different machine learning modalities. This shift makes it possible to respond to more sophisticated interactions, such as formulating responses to questions in the form of images along with text or providing descriptive captions to images. There is an increased emphasis on developing systems that are capable of understanding and responding to human inputs in a more natural way.

Real-World Applications and Use Cases for LLMs

The versatility and efficacy of Large Language Models (LLMs) are displayed in various industries, such as:

- Customer Support Automation

With the application of LLMs, chatbots and virtual assistants can enable automation and ensure human-level conversational support. They can also provide automated support for frequently asked questions, troubleshoot problems, and assist on a round-the-clock basis.

- Content Generation and Editing

LLMs assist in generating new content and editing existing material, from posts to more technical documents. They are invaluable where monotonous writing tasks must be performed while ensuring language and tone consistency throughout the document.

- Language Translation

Contextual and accurate language translation is among the principal functions of any LLM, and these models possess that functionality, along with helping people and organizations communicate without any barriers whatsoever.

- Educational Tools

To make education accessible and adapated, LLMs personalize the learning experience through tutoring, answering questions, and providing simplified learning to complex ideas.

- Creative Applications

Writers, poets, and painters use LLMs to get ideas or even assist in generating detailed descriptions of the artwork and images.

- Healthcare Assistants

Summarizing medical documents and reports, explaining test results, and answering patients¿ basic questions are some of the many uses of LLMs in the healthcare sector. LLMs have opened up new ways to present information to assist professionals, but they should not be considered a substitute.

- Analyses and Interpretation of Information

These technologies assist companies in deciphering enormous volumes of unstructured text data by summarizing reports, conducting sentiment analysis, and efficiently extracting meaningful information.

LLMs have changed how we use technology and enable systems to complete tasks previously done only by a human brain—their versatility guarantees they will be deployed further across functions with changing requirements.

How Businesses Leverage LLMs for Productivity and Innovation

According to my findings, businesses have begun adopting LLMs to enhance productivity and innovation in numerous ways. These models replace mundane tasks such as customer service interactions with automated chatbots and virtual assistants, leading to lower turnaround times and increased customer satisfaction. In addition, a growing number of organizations rely on LLMs for data analytics as they are capable of trend analysis, summarization of complex reports, and extracting useful information through intricate data processing. In other cases, LLMs are deployed for creative exercises like content creation, product and service advertisement, or brainstorming, thus proving their versatility across various sectors. Using LLMs allows companies to redefine the workflow processes to become more productive and responsive to the market dynamics.

Creative and Content Generation Applications

LLMs are effective in content development and other creative efforts. Their flexibility and accuracy are unmatched. The responses provided feature short-form answers along with the requisite technical details.

- What kinds of output are possible from an LLM?

LLMs, or Large Language Models, can generate a wide range of content, such as blog articles, social media posts, marketing pitches, and fictional content. Their flexibility stems from tone and style modification due to prior training on multiple datasets.

- How do LLMs maintain content relevance and accuracy?

Fine-tuning domain-specific datasets and using prompt optimization aids in relevance and accuracy. Other parameters that affect the output are context window size, for instance, 4096 tokens or more, and temperature settings, for example, 0.7, to not overly stifle creativity.

- What is the standard measure of productivity in LLM content creation?

In addition to translation, BLEU is commonly accepted as the best metric,, while ROUGE is better suited for summarization. Human evaluation is also absolutely critical when defining creativity and coherence.

- Can LLMs facilitate content creation in more than one language?

Of course. Languages embedded in the databases that train LLMs, like GPT-4 or Bard, allow these models to create content in different languages. Essential factors in this example are token embeddings and multilingual capabilities with shared language mappings.

- How do LLMs achieve style or brand-specific adaption?

An adaptation can be done by fine-tuning an instruction-driven prompt with the company’s data. Using embeddings about a brand’s voice guarantees flexible stylistic accuracy and consistency alignment.

Regarding token size, temperature, or training datasets, LLMs offer practical solutions for modern industries while showcasing their creative capabilities.

Programming and Technical Use Cases for Language Models

The application of language models is vast and includes almost all areas of engineering and computing, wherein productivity and problem-solving are optimized to high levels.

- Programming and Testing Automation

In various programming languages, GPT-4 can assist in creating code fragments, restructuring previously written code, and suggesting possible solutions within the scope of addressing particular coding problems. For instance:

- Prompt: Write a Python function that sorts a list employing the merge sort algorithm.

- Output: Properly executing a code block by applying the merge sort technique.

- Key Parameters:

- Context length (specifies the amount of relevant code or text the model uses).

- Token size (specifies the intricacy of the produced code).

- API Integration and Documentation

LLMs can help create documentation and provide context for complex API integration tasks. A developer can give API details, and the model will provide an explanation and even sample code demonstrating how the API can be used.

- Better results can be attained when domain-specific APIs fine-tune the model.

- Technical Parameters:

- Lower temperature for consistent and less-detailed documentation (explicitness).

- Fine-tuned dataset specific to the API domain.

- Test Case Generation

Language models can generate unit or automated test cases, saving developers time when going through the software development lifecycle.

- Sample Prompt: “Create unit tests for a JavaScript function that checks the validity of email addresses provided by users.”

- Key Parameters:

- A low temperature to guarantee non-variability for test generation systems which need to be dependable.

- An increased quantity of training samples guarantees domain-specific ones.

- Data Analysis and Transformation

Language models can assist in data transformation and manipulation, including writing code or SQL queries, and generate natural language to code. In particular, users may be able to describe their queries, and the model will construct sophisticated SQL queries to extract the pertinent information.

- Technical Parameters:

- Maximum tokens size allows to capture all elements of the query.

- Zero or one-shot learning for quick adaptation to the new datasets.

Incorporating LLM capabilities in these areas assists the coders in optimizing processes, eliminating tedious work, enhancing quality, and maintaining the uniformity of the code. Combining fine-tuned training datasets and releasable model features guarantees practical and custom-prepared solutions for programming challenges.

The Future of Large Language Models in AI Development

The future of large language models(LLMs) in AI development depends on improving accessibility without loss of efficiency. Broad adoption of AI in diverse industries – healthcare and education included – will be made possible due to further progress in LLMs. They will strive to be less resource-intensive, possibly adopting more compact and efficient yet capable structures. This guarantees their broader utilization without existing models' staggering expense and ecological ramifications.

Improving Customization and Accuracy

A significant concentration will be put on Customization, enabling LLMs to be configured for specific tasks and domains with little need for additional data. Such flexibility guarantees greater precision and relevance in narrowly defined legal or scientific research fields. Heaps of effort will also be put into better-preset configurations, like zero or few-shot learning, which enable new task performance, minimizing development effort and time.

Ethical Considerations and Bias Mitigation

Responsible development of LLMs will focus on bias and transparency issues, among other ethical considerations. Such models will probably be created with frameworks that guarantee AI systems' fairness, security, explainability, and trust. Joint work between governments, academia, industries, and societal sectors will outline standard policies to keep the pace of AI development in line with societal values.

Ultimately, transforming LLMs will focus on crafting flexible, dependable, reliable, ethical instruments to enhance every sector of life. This will open endless opportunities for innovation with the help of AI.

References

- AWS: What is LLM? - Large Language Models Explained

- Medium: How Large Language Models Work. From zero to ChatGPT

- The Guardian: How AI chatbots like ChatGPT or Bard work

Frequently Asked Questions (FAQ)

Q: How do large language models work, and what makes them so advanced?

A: Large language models (LLMs) work through a process of statistical pattern recognition on massive text datasets. They function by analyzing relationships between words and phrases in human language, learning to predict what text should come next given a particular context. The transformer model architecture, first introduced in 2017, powers modern LLMs like ChatGPT. This architecture enables the model to process text in parallel rather than sequentially, giving attention to simultaneously different parts of the input. What makes LLMs so advanced is their scale—larger models with billions or trillions of parameters can capture more nuanced patterns in language understanding and generation. These models are trained on large, diverse datasets comprising books, articles, websites, and code, allowing them to generate coherent and contextually relevant responses across numerous domains.

Q: What is a transformer model, and why is it essential for LLMs?

A: A transformer model is a neural network architecture revolutionizing natural language processing tasks. It's the foundation of all modern LLMs like ChatGPT, GPT-4, and LLaMA. The transformer's key innovation is its "attention mechanism," which allows the model to weigh the importance of different words about each other regardless of their position in a sentence. This enables the model to understand context much better than previous architectures. Transformers process text as tokens (pieces of words or characters) and can handle multiple relationships within text simultaneously, making them highly efficient. The architecture also allows for massive scaling, which is crucial because increasing the model size generally improves capabilities. Without the transformer architecture, the current generation of large language models wouldn't be possible, as it's what enables them to generate human language that feels natural and contextually appropriate.

Q: How are LLMs trained, and what kind of data do they use?

A: LLMs are trained through unsupervised learning, where they learn to predict the next word in a sequence based on previous words. The training process involves feeding the model enormous amounts of text data—often hundreds of billions of words. This training data typically includes diverse sources like books, articles, websites, social media, code repositories, and other text from the internet. During training, the machine learning model adjusts its internal parameters (which can number in the billions or trillions) to predict text patterns better. After this initial pre-training phase, many models undergo fine-tuning with human feedback to improve safety, helpfulness, and accuracy. The quality and diversity of training data significantly impact an LLM's performance and biases. Models like GPT-4 are trained on data that includes multiple languages and programming languages, allowing them to understand and generate code and natural language content.

Q: How do LLMs work when generating responses to user queries?

A: When generating responses, LLMs work through a process called inference. First, the user's input (prompt) is tokenized and broken into pieces that the model can process. The LLM then uses its trained parameters to predict the most likely next token based on the context provided. This prediction is probabilistic; the model may choose the most probable next word or sample from several potential candidates. The model repeats this process, generating one token at a time, with each new token becoming part of the context for predicting the next one. What makes this process remarkable is that the model uses its learned language patterns to create thoughtful and contextually appropriate responses, even though it's fundamentally performing statistical prediction. The quality of responses depends on several factors, including prompt design, model size, and training data quality. During generation, various parameters like temperature control how creative or conservative the outputs are. Despite their impressive capabilities, it's important to remember that LLMs don't truly "understand" content like humans do—they're pattern-matching systems.

Q: What capabilities do modern LLMs have beyond just generating text?

A: Modern LLMs have evolved far beyond simple text generation. They can summarize lengthy documents, translate between languages, answer questions based on provided content, and even exhibit reasoning capabilities to solve complex problems step by step. Many LLMs can write and debug code across various programming languages, create different creative content types, and adapt their writing style to match specific requirements. Advanced models like GPT-4 show emergent abilities—capabilities that weren't explicitly trained for but developed at certain scale thresholds. Some newer systems are multimodal models, able to process and generate content involving images, audio, or video alongside text. LLMs can also be fine-tuned for specialized tasks like medical diagnosis assistance or legal document analysis. They're increasingly used as components in larger AI systems, serving as the language understanding and generation layer for applications ranging from virtual assistants to content creation tools. Despite these impressive capabilities, an LLM may still struggle with tasks requiring real-world grounding or up-to-date knowledge beyond its training data.

Q: What are the limitations of LLMs that users should be aware of?

A: Despite their impressive capabilities, LLMs have significant limitations. They don't truly understand content—they predict statistical patterns in text without comprehending meaning like humans do. LLMs can produce "hallucinations" (confidently stated but factually incorrect information) because they prioritize fluent responses over accuracy when uncertain. Their knowledge is limited to their training data cutoff date, making them unable to access current information without additional tools. These AI models struggle with complex reasoning, mathematics, and tasks requiring real-world verification. LLMs may perpetuate biases in their training data, potentially producing harmful or discriminatory outputs. They lack agency or consciousness—they cannot form intentions or desires. Users should approach LLM outputs critically, verifying important information from authoritative sources, especially for consequential health, finance, or legal decisions. Understanding these limitations helps set appropriate expectations for what these powerful but imperfect tools can reliably deliver.

Q: How do transformer-based LLMs differ from earlier language models?

A: Transformer-based LLMs represent a quantum leap from earlier language models in several ways. Previous models like recurrent neural networks (RNNs) processed text sequentially, word by word, limiting their ability to capture long-range dependencies in language. The transformer architecture processes entire sequences in parallel through its attention mechanism, dramatically improving efficiency and language understanding. Earlier models typically had millions of parameters, while modern LLMs have billions or trillions, enabling much more sophisticated pattern recognition. Pre-transformer models struggled with generating long, coherent text that maintained context, whereas transformer-based LLMs can produce extensive, contextually consistent content. The earlier generation of language models required more task-specific training, while transformer LLMs demonstrate remarkable few-shot and zero-shot learning capabilities—they can perform tasks with minimal or no specific examples. This architectural revolution has enabled natural language generation that is qualitatively different, with outputs that frequently appear human-like in their coherence, relevance, and apparent understanding of complex concepts.

Q: How can businesses and developers effectively use LLMs in their applications?

A: Businesses and developers can effectively use LLMs by understanding their capabilities and limitations. For implementation, they can use API access to existing models like GPT-4 or deploy open-source models like LLaMA or Falcon. LLMs excel as flexible components in larger systems—they can generate content, analyze text, translate languages, and serve as natural language interfaces. Prompt engineering is crucial; well-crafted prompts with clear instructions, examples, and context significantly improve outputs. Implementing retrieval-augmented generation (RAG) helps overcome LLMs' knowledge limitations by supplementing them with current or domain-specific information for many applications. Developers should build feedback loops to improve system performance and implement safeguards against potentially harmful outputs. Cost optimization is essential since running larger models can be expensive—choosing appropriate model sizes for specific tasks can reduce expenses. Finally, ethical considerations must be addressed, including obtaining consent when using customer data, ensuring outputs are appropriately attributed, and implementing guardrails against misuse. The most successful implementations typically combine LLMs with other AI and traditional software components rather than relying on them exclusively.

Q: What is the future for large language models and generative AI?

A: The future of large language models and generative AI promises rapid evolution in several directions. We'll likely see more efficient models that deliver similar capabilities with fewer computational resources, making this technology more accessible and environmentally sustainable. Multimodal models that seamlessly work across text, images, audio, and video will become increasingly sophisticated, enabling richer interactions. Specialization will advance with domain-specific models optimized for medicine, law, and scientific research. Integration with external tools and APIs will allow LLMs to interact with the world, access real-time information, and perform actions beyond text generation. Personalization will improve as models better adapt to individual users' needs and communication styles. Ethical and governance frameworks will mature, addressing concerns around misinformation, bias, and appropriate use cases. Perhaps most significantly, we'll see a more profound integration of LLMs into everyday software, making natural language interfaces ubiquitous across applications. While artificial general intelligence remains a distant goal, incremental improvements in reasoning, factuality, and safety will continue to expand what these systems can reliably accomplish.

Q: How can I evaluate whether an LLM is the right solution for my problem?

A: To evaluate if an LLM is right for your problem, assess whether your task primarily involves language understanding or generation. LLMs excel at content creation, summarization, classification, translation, and conversational interfaces. Consider your accuracy requirements—if your application needs high precision (like medical diagnosis or legal compliance), an LLM may need significant augmentation with retrieval systems and human review. Evaluate data privacy concerns, as sending sensitive information to external API-based models may not be acceptable for all use cases. Assess cost implications in terms of direct costs for commercial models and computational resources for self-hosted solutions. Consider latency requirements, as some applications may not tolerate the response times of larger models. Examine whether your problem needs current information beyond the model's training cutoff or domain-specific knowledge. Finally, run practical experiments with representative examples from your specific use case to measure performance. The most effective solutions often combine LLMs with other components like databases, search engines, or specialized algorithms rather than relying solely on the language model. This balanced approach leverages the strengths of LLMs while compensating for their limitations.